What are Virtual Voices? (And why they're not just chatbots with names)

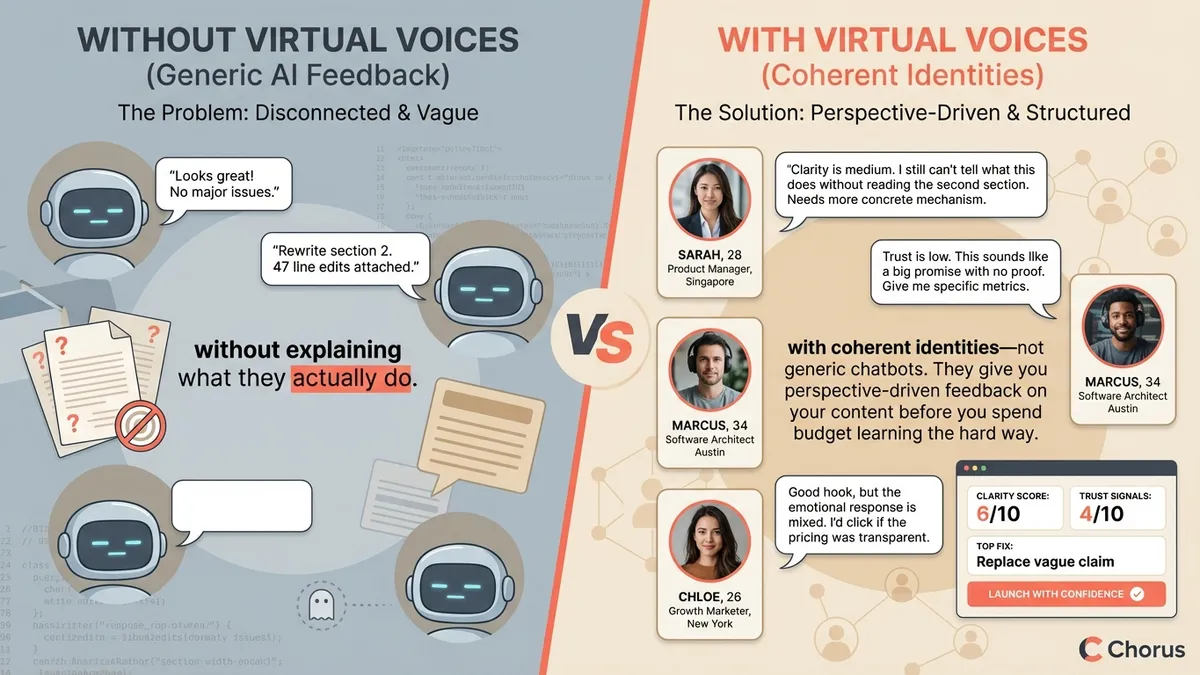

If you’ve ever tried to get feedback on a landing page, you know the drill: you send it to three colleagues. One says “looks great!” Another gives you 47 line edits. The third ghosts you entirely. None of them are your actual target customer.

This is the feedback gap that virtual voices are designed to close.

TL;DR

Virtual Voices are AI personas with coherent identities—not generic chatbots. They give you perspective-driven feedback on your content before you spend budget learning the hard way.

Think of them as practice quizzes before the A/B test final exam.

What virtual voices actually are

A Virtual Voice is an AI-generated persona with a coherent identity—demographics, psychology, preferences, communication style, and lived context. In the market research industry, you might hear similar concepts called synthetic personas, synthetic users, AI personas, or synthetic respondents. We use “Virtual Voices” because the term emphasizes what matters: each persona has a distinct voice—a consistent point of view that shapes how they react to your content.

Think of it like this: if you asked ChatGPT “what do you think of this ad?”, you’d get a generic, somewhat agreeable response. But if you asked Marcus, a 34-year-old software architect in Austin who’s skeptical of marketing claims but genuinely interested in developer tools, you’d get a reaction shaped by that skepticism and that interest.

Virtual Voices aren’t chatbots. They’re perspective engines—what researchers call synthetic respondents with behavioral modeling. Each persona has:

- Demographics: Age, location, occupation, income bracket, education

- Psychographics: Values, motivations, decision-making style

- Context: Life circumstances, media consumption, brand relationships

- Communication patterns: How they express opinions, what they notice first
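If it helps to picture those four facets as data, here is a minimal sketch of a persona record. It is illustrative only: the `VirtualVoice` class, its field names, and the `brief()` helper are assumptions made for this example, not Chorus's actual schema, and Marcus's record contains only the details mentioned above.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class VirtualVoice:
    """Hypothetical persona record; names are illustrative, not Chorus's schema."""

    name: str
    demographics: dict[str, str]   # age, location, occupation, ...
    psychographics: list[str]      # values, motivations, decision-making style
    context: list[str] = field(default_factory=list)        # circumstances, media, brands
    communication: list[str] = field(default_factory=list)  # how opinions get expressed

    def brief(self) -> str:
        """Render the persona as a short text brief a reviewer could be conditioned on."""
        demo = ", ".join(f"{key}: {value}" for key, value in self.demographics.items())
        traits = "; ".join(self.psychographics)
        return f"{self.name} ({demo}). Traits: {traits}."


# Only the details given for Marcus in the text; the other facets are left empty.
marcus = VirtualVoice(
    name="Marcus",
    demographics={"age": "34", "occupation": "software architect", "location": "Austin"},
    psychographics=["skeptical of marketing claims", "interested in developer tools"],
)

print(marcus.brief())
```

The point of the structure is that the same record travels with the persona from one piece of content to the next, which is what keeps the point of view stable.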

The goal isn’t to replace real users. It’s to give you informed directional feedback before you spend money finding out your headline doesn’t land.

What Are Synthetic Respondents? (Quick Definition)

Synthetic respondents are AI-generated participants used in market research instead of (or alongside) real human respondents. The term comes from traditional survey research, where “respondents” are the people who answer the questions. Synthetic respondents are AI systems that simulate how real people would answer.

The meaning of synthetic respondents varies by context:

- In quantitative research: AI that predicts survey responses based on demographic patterns

- In qualitative research: AI personas that provide detailed, naturalistic feedback (this is what Virtual Voices do)

- In market simulation: AI agents that model purchasing decisions

Virtual Voices are synthetic respondents optimized for qualitative creative feedback: they don’t just predict “yes/no” answers; they explain why in their own words.

Virtual Voices vs. Industry Terminology

You’ll encounter different names for similar concepts across the market research and creative testing industry:

| Industry Term | Who Uses It | How Virtual Voices Compare |
|---|---|---|
| Synthetic users | Synthetic Users, general industry | Virtual Voices are synthetic users optimized for creative feedback |
| Synthetic personas | OpinioAI, Accenture | Virtual Voices include deeper psychological modeling and communication patterns |
| AI agents | Aaru, enterprise research | Virtual Voices are agents specialized for content evaluation, not general prediction |
| Synthetic respondents | NIQ, Qualtrics | Virtual Voices provide richer qualitative feedback than typical synthetic respondents |
| Creative testing | Kantar LINK AI, System1 | Chorus combines synthetic personas with structured rubrics (hybrid qualitative + quantitative) |

We built Virtual Voices specifically for creative testing and ad pre-testing—a narrower focus than general-purpose synthetic users or prediction engines, which lets us optimize for the feedback marketers actually need before launch.

AI Personas for Marketing: Common Use Cases

When marketers search for AI personas for marketing or AI personas for market research, they’re usually trying to solve one of these problems:

| Use Case | What They Need | How Virtual Voices Help |
|---|---|---|
| Ad pre-testing | “Will this ad resonate before I spend budget?” | Run creative through target personas, get clarity/trust scores |
| Landing page feedback | “Is my message clear to my ICP?” | Persona-specific comprehension checks, jargon detection |
| Message testing | “Which headline/hook works better?” | A/B test messaging with consistent persona panels |
| Audience research | “How do different segments react?” | Compare responses across demographic/psychographic profiles |
| B2B marketing | “Will this resonate with technical buyers?” | Specialized personas (developers, CTOs, procurement) |

AI personas for B2B marketing are particularly valuable because B2B audiences are harder to recruit for traditional research. You can’t easily assemble a focus group of CTOs—but you can model their decision-making patterns, skepticism triggers, and information needs.

The key insight from teams we talked to: AI personas aren’t replacing customer interviews. They’re filling the gap between “no research” (shipping blind) and “formal research” (expensive, slow). Most content ships with zero validation. AI personas make lightweight validation the default.

What makes a voice feel consistent (instead of random)

The trick isn’t giving a persona a name. It’s giving it a stable point of view. In Chorus, each voice is anchored to a clear identity (who they are, what they care about, what they distrust), and then put into a specific scenario (what they’re looking at, what they’re trying to decide). Think of it like sci‑fi worldbuilding: the rules of the universe stay the same, even when the plot changes.

An example: Meet Sarah

Here’s what a virtual voice actually looks like:

Sarah Chen, 28, Product Manager at a Series B startup in Singapore

Decision style: Data-driven but time-constrained. Skims first, digs deep only if the hook lands.

Trust signals: Social proof from peers, specific metrics, transparent pricing. Instantly skeptical of enterprise-speak and vague promises.

Media diet: Lenny’s Newsletter, First Round Review, Product Hunt. Avoids anything that feels like traditional advertising.

Current frustration: Too many tools promise AI magic without explaining what they actually do.

When Sarah reviews your landing page, she’s not scanning for keywords. She’s reacting as Sarah—with her specific context, biases, and attention patterns. That’s the difference between “AI feedback” and a virtual voice.

How virtual voices evaluate content

When a virtual voice reviews your content, it simulates a cognitive response:

- First impression — What grabs attention (or doesn’t)

- Comprehension — Is the message clear to this specific person?

- Credibility assessment — Does this feel trustworthy to them?

- Emotional response — Interest, skepticism, excitement, confusion

- Action likelihood — Would they click, sign up, or bounce?

The output isn’t a vague sentiment score. It’s structured feedback with specific quotes, concerns, and suggestions—the kind you’d get from a thoughtful focus group participant.

Here’s a simplified example of the shape of that output (not the exact format):

- Clarity: Medium — “I still can’t tell what this does without reading the second section.”

- Trust: Low — “This sounds like a big promise with no proof.”

- Top fix: Replace one vague claim with a concrete mechanism or proof point.
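To make that shape concrete, here is one way the same example could be held as structured data. This is a sketch under assumptions: the `Rating`, `CriterionResult`, and `VoiceFeedback` names and the three-level scale are invented for illustration and are not the real report format.

```python
from dataclasses import dataclass
from enum import IntEnum


class Rating(IntEnum):
    """Assumed three-level scale; the real rubric may differ."""

    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class CriterionResult:
    criterion: str
    rating: Rating
    quote: str  # the persona's own words backing up the rating


@dataclass
class VoiceFeedback:
    results: list[CriterionResult]
    top_fix: str

    def weakest(self) -> CriterionResult:
        """Return the lowest-rated criterion, i.e. where to look first."""
        return min(self.results, key=lambda result: result.rating)


feedback = VoiceFeedback(
    results=[
        CriterionResult(
            "clarity",
            Rating.MEDIUM,
            "I still can't tell what this does without reading the second section.",
        ),
        CriterionResult(
            "trust",
            Rating.LOW,
            "This sounds like a big promise with no proof.",
        ),
    ],
    top_fix="Replace one vague claim with a concrete mechanism or proof point.",
)

worst = feedback.weakest()
print(f"{worst.criterion}: {worst.rating.name} - {worst.quote}")
```

Keeping the quote next to the rating is the design choice that matters here: a rating tells you where the problem is, and the quote tells you what to rewrite.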

Why this matters for your workflow

The traditional feedback loop looks like this:

- Create content

- Show it to teammates (who are too close to the product)

- Launch

- Wait for real users to tell you what’s broken

- Fix it

- Repeat

Virtual voices let you insert a pre-launch checkpoint:

- Create content

- Run it through relevant personas

- Get specific, perspective-driven feedback

- Fix issues before launch

- Launch with confidence

It’s not about replacing user research. It’s about not flying blind in the large majority of cases where you don’t have time or budget for formal research.

The “but is it really accurate?” question

Fair question. Here’s the honest answer: virtual voices are directionally useful, not oracular. They catch:

- Clarity problems that insiders miss

- Jargon that alienates outsiders

- Trust signals that resonate (or don’t)

- Objections you forgot to address

- Tone mismatches with your target audience

They don’t replace:

- Quantitative A/B testing

- Deep qualitative research

- Actual customer conversations

Think of them as a spell-check for messaging. You wouldn’t ship without running spell-check, even though it doesn’t catch every error. Virtual voices work the same way—they catch the obvious problems so you can focus your real-user research time on the hard questions.

Keeping feedback structured (not just vibes)

Perspectives are powerful, but they can still be messy if everyone is reacting to different things.

That’s why Chorus pairs Virtual Voices with Testing Kits—structured evaluation criteria (clarity, trust, differentiation, CTA readiness) so feedback is comparable across runs and across personas.

If you want the practical version of this, see: Testing Kits and Bundles.
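As a rough illustration of why shared criteria make feedback comparable, the sketch below averages ratings per criterion across a small panel. Everything in it is an assumption: the snake_case criterion keys, the 1-to-3 scale, the `summarize` function, and especially the ratings themselves, which are placeholder numbers invented for the example rather than real results.

```python
from statistics import mean

# Criteria named in the text; the snake_case keys are labels chosen for this sketch.
CRITERIA = ("clarity", "trust", "differentiation", "cta_readiness")

# Placeholder ratings (1 = low, 3 = high), invented purely for illustration.
panel = {
    "Sarah": {"clarity": 2, "trust": 1, "differentiation": 2, "cta_readiness": 1},
    "Marcus": {"clarity": 3, "trust": 1, "differentiation": 2, "cta_readiness": 2},
}


def summarize(panel: dict[str, dict[str, int]]) -> dict[str, float]:
    """Average each criterion across personas; fail loudly if a rating is missing."""
    for persona, ratings in panel.items():
        missing = set(CRITERIA) - set(ratings)
        if missing:
            raise ValueError(f"{persona} is missing ratings for: {sorted(missing)}")
    return {
        criterion: float(mean(ratings[criterion] for ratings in panel.values()))
        for criterion in CRITERIA
    }


summary = summarize(panel)
weakest = min(summary, key=summary.get)

print(summary)
print("Weakest criterion:", weakest)
```

Because every persona is scored against the same keys, a missing or extra criterion shows up as an error instead of silently skewing the comparison between runs.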

Getting started with virtual voices

In Chorus, you can:

- Choose from pre-built personas that match common segments (developers, marketers, executives, etc.)

- Customize demographics to match your specific audience

- Run panels with multiple perspectives for balanced feedback

The goal is to make “getting outside perspective” as easy as running a spell-check—something you do routinely, not something that requires a project plan.

Confused by industry terminology? See our glossary of synthetic personas and creative testing terms for how Navay’s terminology maps to industry standards.

Want to see Chorus in action? Book a demo or see example reports.