AI Creative Testing Tools: 3 Ways to Test Ads Before Launch

When we started talking to product marketers about their pre-launch workflows, we kept hearing the same frustration: “I need feedback, but I don’t know what kind.”

Some wanted a quick score before a meeting. Others needed to understand how different audience segments would react. A few wanted the full focus group experience (diverse perspectives, debate, surprising insights) without the weeks of planning and the $30,000 budget it takes to hear eight people politely lie to them about their ad.

So we built three distinct test types in Chorus. Each one answers a different question, and knowing which to use can save you hours of second-guessing. (New to AI creative testing terminology? See our glossary for industry terms.)

TL;DR

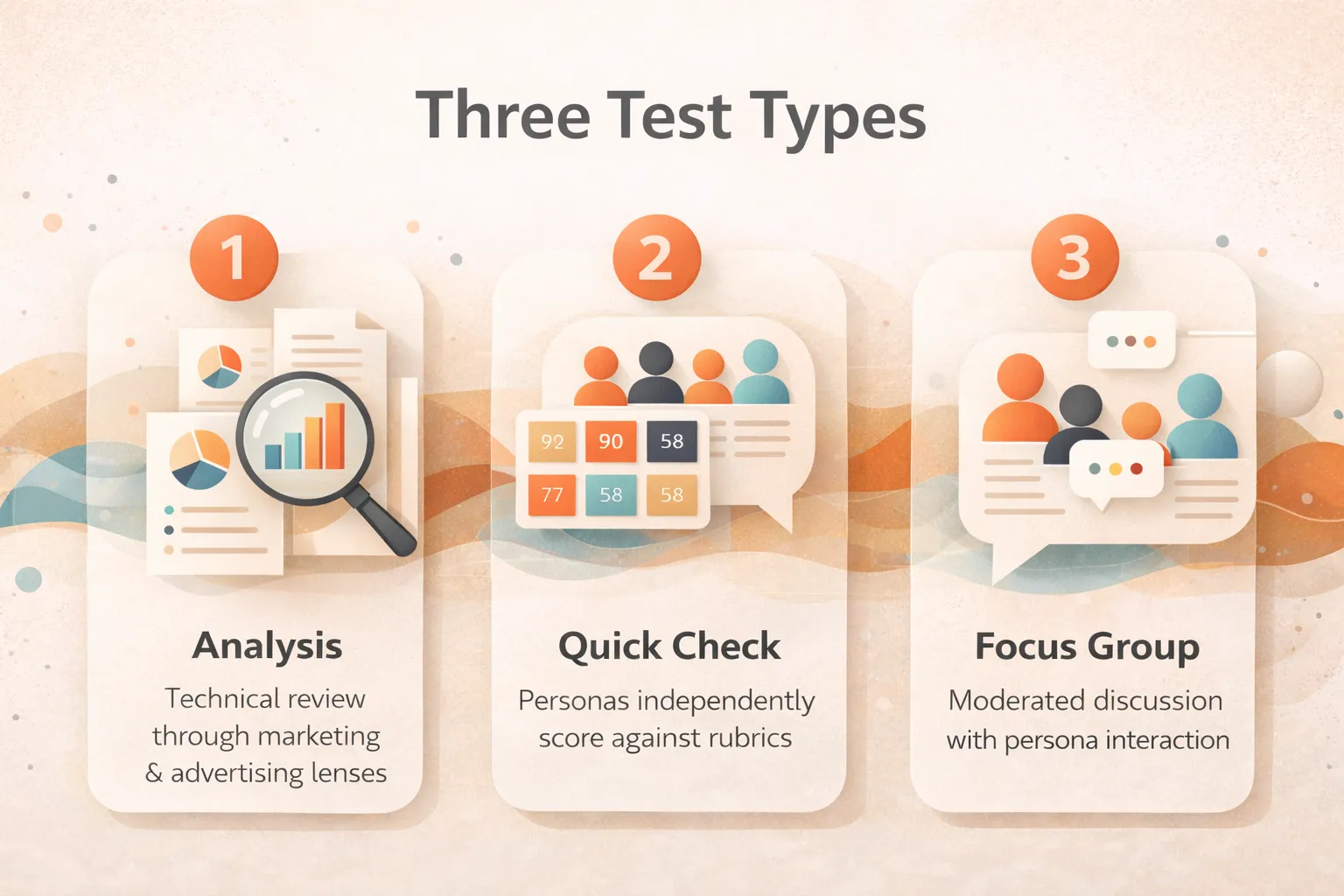

Analysis examines your content through marketing and advertising lenses for a technical breakdown. Quick Check has Virtual Voices independently score your content against specific rubrics. Focus Group simulates a moderated discussion where personas interact and respond to each other. Each test runs independently—use one, two, or all three based on what you need to learn.

Jump to:

- What Is AI Creative Testing?

- Pre-Launch vs Post-Launch Testing

- Analysis (Technical Breakdown)

- Quick Check (Quantitative Scores)

- Focus Group (Qualitative Discussion)

- How to Choose the Right Test

- Chorus vs Kantar vs Zappi

What Is AI Creative Testing & Why It Matters

AI creative testing (also called ad pre-testing, creative pre-testing, or predictive creative testing) is the process of evaluating marketing content using AI before you spend budget on it. Instead of waiting weeks for traditional research or relying on gut instinct, AI creative testing tools give you structured feedback in minutes.

The category has grown rapidly as AI-generated creative has exploded. When you can produce 50 ad variants in a day, you need a way to evaluate them at the same speed—without sacrificing the qualitative depth that makes feedback actionable. (See our glossary for how these industry terms map to Chorus features.)

The core question: Which ads will work, and why? AI creative testing tools approach this from different angles:

- Predictive scoring — Will this ad perform well? (probability-based)

- Audience simulation — How will specific segments react? (persona-based)

- Structural analysis — What’s technically working or broken? (audit-based)

Chorus offers all three approaches through its three test types. Here’s how they work—and when to use each.

Pre-Launch Testing vs Post-Launch Optimization

Before diving into test types, it’s worth clarifying what AI creative testing isn’t: it’s not A/B testing.

Traditional A/B testing happens after you launch—you show real ads to real audiences and measure CTR, CPA, and ROAS. That’s valuable, but it means spending budget to learn what works. If your creative has a fundamental messaging problem, you’ve already paid to discover it.

AI creative testing flips the sequence. You evaluate content before spending budget, using synthetic personas to surface issues while changes are still free. Think of it as a pre-flight check: you wouldn’t skip the checklist just because you’ll eventually learn whether the plane flies.

| Dimension | Pre-Launch AI Testing (Chorus) | Post-Launch A/B Testing |
|---|---|---|
| When it runs | Before budget is spent | After ads are live |
| What you measure | Persona reactions, qualitative feedback | CTR, CPA, ROAS, conversions |
| Cost of learning | Minutes of AI processing | Ad spend + time in market |
| What you learn | Why something works or doesn’t | Whether it performs |
| Sample size | Not applicable (synthetic personas) | Requires statistical significance |
| Speed to insight | Minutes | Days to weeks |

The two approaches aren’t competitors—they’re sequential. Use AI testing to validate and iterate quickly, then use live A/B testing to optimize performance once you’ve shipped something you’re confident in.

What is Analysis?

Analysis examines your content through multiple professional lenses—marketing effectiveness, advertising best practices, brand alignment, and technical execution. It breaks down what’s working, what’s missing, and what might confuse your audience.

Think of it as having a senior strategist review your landing page, ad creative, or pitch deck and tell you exactly what they see. You get structured feedback on clarity, persuasion, messaging flow, and audience fit—all in one comprehensive report.

What Analysis tells you

- Content breakdown: section by section

- Marketing lens review: evaluates positioning and value proposition

- Advertising effectiveness: checks hooks, CTAs, and persuasion techniques

- Messaging analysis: evaluates clarity and flow

- Gap identification: highlights missing elements

- Improvement suggestions: specific recommendations for what to change

When to use Analysis

Analysis is your go-to when you want to understand your content’s structure and effectiveness. Customers told us they reach for it when:

- Something feels off but they can’t articulate it

- They need to brief stakeholders on a piece of content

- They want a technical review of messaging and structure

- They’re evaluating competitor content

What is Quick Check?

Quick Check is your quantitative scorecard. Select Virtual Voices (representing your target audience) and evaluation criteria that matter for your content type—then each Voice independently scores your content against those dimensions.

The key word is independently. Each Virtual Voice evaluates your content on its own—there’s no discussion or interaction between them. You get direct, unfiltered reactions from each perspective, scored against the specific dimensions you care about.

What Quick Check tells you

- Scores from 0-100 for each rubric, from each persona

- Pass/warn/fail verdicts so you know what needs attention

- Priority fixes ranked by impact across all voices

- Score matrix showing how different audience segments responded to each criterion

When we showed early users the score matrix view, the reaction was consistent: “Finally, I can see which personas have concerns instead of just getting a single average.” The heatmap visualization makes it obvious when your Gen Z audience loves something that falls flat with decision-makers.
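To make the score-matrix idea concrete, here is a minimal Python sketch of how independent per-persona rubric scores could be summarized. It is not Chorus’s implementation: the persona names and scores are made up, and the pass/warn/fail cutoffs (70 and 50) and the “impact” ranking (failing voices first, then warnings, then how far the average sits below the pass bar) are hypothetical choices for illustration. What it shows is why a single average can mislead: a rubric can average above the pass bar while one segment still flags it.

```python
from statistics import mean

# Hypothetical verdict cutoffs, chosen only for illustration.
PASS_AT = 70
WARN_AT = 50

# Made-up score matrix: persona -> rubric -> independent 0-100 score.
# Assumes every persona scored every rubric.
scores = {
    "Gen Z shopper": {"clarity": 88, "trust": 81, "cta": 90},
    "IT decision-maker": {"clarity": 74, "trust": 42, "cta": 65},
    "Procurement lead": {"clarity": 79, "trust": 55, "cta": 71},
}


def verdict(score: float) -> str:
    """Map a 0-100 score to pass/warn/fail using the assumed cutoffs."""
    if score >= PASS_AT:
        return "pass"
    if score >= WARN_AT:
        return "warn"
    return "fail"


def rubric_summary(matrix: dict) -> dict:
    """For each rubric: the average, the max-min spread, and every persona's verdict."""
    rubrics = next(iter(matrix.values())).keys()
    summary = {}
    for rubric in rubrics:
        column = {persona: row[rubric] for persona, row in matrix.items()}
        values = list(column.values())
        summary[rubric] = {
            "average": round(mean(values), 1),
            "spread": max(values) - min(values),
            "verdicts": {p: verdict(s) for p, s in column.items()},
        }
    return summary


def impact(info: dict) -> tuple:
    """Hypothetical impact key: failing voices, then warning voices, then average shortfall."""
    verdicts = list(info["verdicts"].values())
    shortfall = max(0, PASS_AT - info["average"])
    return (verdicts.count("fail"), verdicts.count("warn"), shortfall)


def priority_fixes(summary: dict) -> list:
    """Rubrics that need attention, highest hypothetical impact first."""
    ranked = sorted(summary, key=lambda r: impact(summary[r]), reverse=True)
    return [r for r in ranked if impact(summary[r]) != (0, 0, 0)]


if __name__ == "__main__":
    summary = rubric_summary(scores)
    for rubric, info in summary.items():
        print(rubric, info["average"], info["spread"], info["verdicts"])
    print(priority_fixes(summary))  # ['trust', 'cta']
```

In this made-up data, the CTA rubric averages about 75, which would pass on its own, yet the IT decision-maker’s warning still puts it on the fix list. Ranking failing and warning voices ahead of the average is one way to keep a minority segment from disappearing into the mean; a real tool might instead weight segments by business priority.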

When to use Quick Check

Quick Check works best when you need structured scores across specific evaluation criteria. Customers told us they use it for:

- Pre-meeting validation (“Does this deck pass our quality bar?”)

- A/B variant comparison (“Which headline scores higher on clarity?”)

- Iteration tracking (“Did my revision actually improve trust signals?”)

- Multi-audience testing (“How do different segments score this?”)

The output is numbers-first—each persona’s independent judgment, quantified.

What is Focus Group?

Focus Group simulates what happens when you put real people in a room and ask them to react to your creative. Except instead of eight people who showed up for the gift card, you get a carefully constructed panel of Virtual Voices that actually match your target audience.

Unlike Quick Check, Focus Group creates interaction between personas. A moderator guides the discussion, curates quotes from initial reactions, and asks other personas to respond to what their peers said. This surfaces consensus, disagreement, and the kind of “wait, I hadn’t thought of that” moments that make real focus groups valuable.

Instead of scoring against rubrics directly, personas answer open-ended questions. The moderator synthesizes their responses into themes, insights, and derived scores based on the discussion.

What Focus Group tells you

- Overall sentiment and a signal strength score

- Standout quotes with attribution to specific personas

- Key themes that emerged across the panel

- Consensus and disagreement: where voices aligned or diverged

- Cross-persona reactions: moments where one voice responds to another’s opinion

- Recommendations synthesized from the discussion

- Question-by-question breakdown with representative quotes

The magic is in the interaction. When we analyzed early Focus Group results, we noticed something interesting: the most valuable insights often came when one persona pushed back on another’s opinion. Like the lone dissenter in Twelve Angry Men, that “I see it differently” moment frequently surfaced blind spots the initial consensus missed.

When to use Focus Group

Focus Group shines when you need qualitative understanding—the texture of how people respond, including how they react to each other’s perspectives. Customers use it for:

- Concept validation (“Does this messaging resonate, and why?”)

- Audience discovery (“How do different segments react to each other’s views?”)

- Pre-research preparation (“What questions should we ask in our real focus group?”)

- Creative direction (“Which emotional tone lands better, and what’s the debate?”)

Customers told us Focus Group helps them identify themes worth exploring in follow-up research—without committing to a full study upfront.

How to Choose the Right Creative Test Type

Each test type answers a different question:

| Your question | Use this |
|---|---|
| “What’s the technical quality of this content?” | Analysis |
| “How does each audience segment score this?” | Quick Check |
| “What would people say about this in a room together?” | Focus Group |
| “Which version scores better on specific criteria?” | Quick Check |
| “Why isn’t this landing page resonating?” | Focus Group |
| “Is my messaging structured correctly?” | Analysis |
| “What themes should I explore in research?” | Focus Group |

These tests are completely independent—none of them feeds into the others. You can run any combination based on what you need to learn.

How AI Creative Testing Tools Compare: Chorus vs Kantar vs Zappi

If you’re evaluating AI creative testing tools, you’ve likely encountered established players like Kantar LINK AI, Zappi, and System1. Here’s how Chorus’s approach differs:

| Dimension | Kantar LINK AI | Zappi | System1 | Chorus |
|---|---|---|---|---|
| Primary approach | Predictive model (historical data) | Survey + AI scoring | Emotion measurement | Synthetic personas + structured rubrics |
| Speed | Hours–days | Hours | Days | Minutes |
| Output type | Predicted scores, benchmarks | Scores + verbatims | Star rating, emotion curve | Multi-persona scores + qualitative reasoning |
| Qualitative depth | Limited | Moderate | Limited | High (verbatim quotes, persona debate) |
| Customization | Standardized framework | Template-based | Standardized | Custom personas, custom rubrics |
| Best for | TV/video validation at scale | Rapid concept testing | Predicting market performance | Iterative teams, variant testing, understanding “why” |

When to choose established tools: You need benchmark data against years of historical performance, you’re validating major TV campaigns, or your organization requires industry-standard metrics.

When to choose Chorus: You’re iterating fast and need qualitative understanding, you want to know why something works (not just whether), or you need persona-level detail on how different segments react. Chorus’s Focus Group mode surfaces the debate between personas—insights you can’t get from scores alone.

Where Chorus Fits in Your Testing Workflow

The question isn’t “Chorus or A/B testing?”—it’s “what do I need to learn, and when?”

Early iteration (Chorus territory):

- You have 10 headline variants and need to narrow to 3

- Something feels off about your landing page but you can’t articulate it

- You’re preparing for a creative review and want to pressure-test messaging

- You need to understand how different audience segments will react before committing

Final validation (Kantar/System1 territory):

- You have a polished asset ready for major media spend

- You need industry benchmark scores for stakeholder approval

- You require historical performance prediction based on ad databases

Post-launch optimization (A/B testing territory):

- Your creative is live and you’re optimizing for conversion

- You need statistically significant performance data

- You’re measuring actual CTR, CPA, and ROAS

Many teams use all three layers. Chorus catches conceptual issues early (when fixing them is cheap), benchmark tools validate the final cut, and live A/B testing optimizes performance once you’re in market.

Running multiple tests

Customers often run multiple test types on the same content to get different perspectives. Upload once, select your tests, and get a technical analysis, persona scores, and a simulated discussion, each running independently in parallel.

The results complement each other without overlapping. Analysis might identify that your CTA is buried too deep in the page. Quick Check shows persona-by-persona scores on CTA effectiveness. Focus Group reveals the conversation: “I almost missed the signup button” followed by another persona responding “Really? It was the first thing I noticed”—and suddenly you understand why your scores were split.

Three lenses, three types of insight, one piece of content.

Want to see which test type fits your workflow? Try Chorus free or book a demo to see all three in action.