AI Ad Pre-Testing: What Quick-check Tests Actually Measure (With Real Report)

When we talk to marketing teams, the first question they ask is: how can we get focus-group-quality feedback in minutes instead of weeks?

That question kept coming up while we were building Chorus. Traditional creative testing has a brutal math problem: by the time you get feedback, you’ve already burned through the window where that feedback would have helped. What if you could get structured evaluation from multiple perspectives before committing budget?

That’s what Quick-check tests do. And in this post, I’ll walk you through exactly how they work—including a real report from a Coinbase video ad that scored 72/100.

TL;DR

Quick-check tests run your creative through multiple Virtual Voices, each of which scores it against a structured rubric. You get a composite score plus a criterion-by-criterion breakdown in minutes, not weeks. The Coinbase ad scored 90/100 on attention capture but only 34/100 on persuasion: exactly the kind of gap you need to see before spending.

Browse sample reports or book a demo to try it with your own creative.

The 30-Second Version

Think of a Quick-check test like psychohistory for your creative—Hari Seldon’s predictive science from Foundation, but instead of predicting galactic civilizations, we’re predicting how real audience segments will react to your ad.

Instead of recruiting 5 strangers, scheduling a room, and waiting 3-6 weeks, you define your target audience as Virtual Voices, select a rubric (like “Video Ad Effectiveness”), and get structured feedback in minutes. Virtual Voices are AI-powered personas calibrated to represent specific audience segments. Each Voice evaluates your creative against specific criteria—not vague opinions, but scored dimensions like “CTA Clarity” or “Message Comprehension.”

The output is a report with a composite score (72/100), individual criterion breakdowns, and the specific issues each Voice identified. It’s designed to answer one question: should we iterate, or should we ship?

What is a Quick-check test?

A Quick-check test is Chorus’s fast-feedback evaluation mode, covering what the industry calls AI creative scoring, ad pre-testing, or predictive creative testing. You submit creative (video, landing page, ad copy), select a rubric that defines what “good” looks like, and get evaluations from multiple Virtual Voices (synthetic personas) representing your target audience.

It’s not a focus group replacement. It’s a preflight check—similar to what tools like Kantar’s LINK AI or Zappi offer for ad testing, but designed for the speed marketers actually need. (See our glossary for how these industry terms map to Chorus features.)

Here’s what makes it different from asking ChatGPT “is this ad good?”:

- Multiple perspectives: You get evaluations from 5+ distinct Virtual Voices, each with different demographics, attitudes, and concerns. Not just one AI’s opinion.

- Rubric-based scoring: Instead of vibes, each Voice scores against defined criteria such as CTA Clarity, Message Comprehension, and Emotional Connection. These are quantitative dimensions you can track across variants.

- Structured evidence: Every score comes with reasoning. When a Voice gives you 34/100 on persuasion, it tells you why, and what would change its mind.

Kantar LINK AI vs Quick-check: How AI Ad Testing Tools Compare

If you’ve researched AI ad testing, you’ve probably encountered Kantar LINK AI—one of the most established names in predictive ad testing. Teams often ask us how Quick-check compares.

| Dimension | Kantar LINK AI | Quick-check (Chorus) |
|---|---|---|
| Approach | Predictive model trained on ad performance data | Synthetic personas evaluate against rubrics |
| Output | Predicted attention, engagement, brand impact scores | Multi-persona scores + verbatim reasoning |
| Speed | Hours to days | Minutes |
| Customization | Standardized framework | Custom rubrics, custom personas |
| Best for | Large brands, TV/video ad validation | Iterative teams, rapid variant testing |
| Qualitative depth | Limited (primarily quantitative) | High (persona quotes, specific objections) |

When to choose Kantar: You’re a large brand running major TV campaigns and need to benchmark against Kantar’s historical database. The predictive model is trained on years of ad performance data.

When to choose Quick-check: You’re iterating fast, need to test multiple variants, want to understand why something works (not just whether it will), and can’t wait days for results. Quick-check gives you the qualitative “why” that pure prediction misses.

They’re not direct substitutes—Kantar predicts market performance, Quick-check surfaces audience reactions. Some teams use both: Kantar for final validation, Quick-check for rapid iteration.

How does the evaluation actually work?

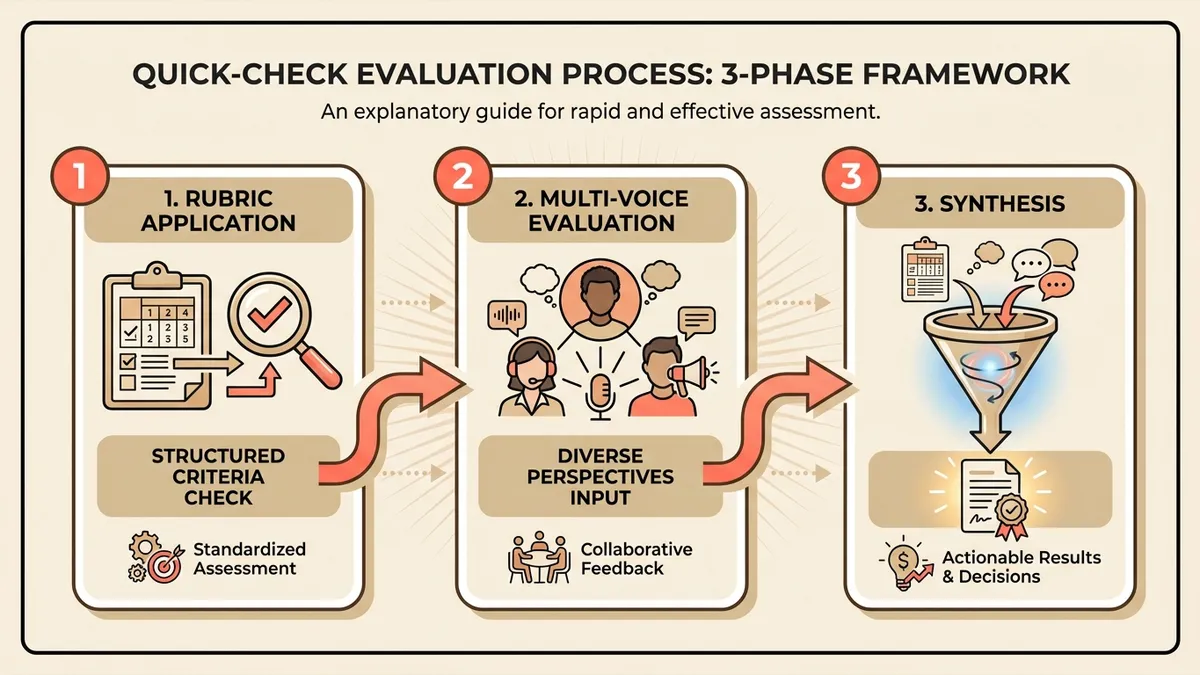

Under the hood, a Quick-check test runs through three phases.

Phase 1: Rubric Application

You select (or customize) a rubric: a structured evaluation framework with criteria and sub-criteria. For the Coinbase video ad, we used “Video Ad Effectiveness,” which includes:

| Criterion | What It Measures |
|---|---|
| CTA Clarity | Is the action clear and compelling? |
| Message Comprehension | Do viewers understand the core message? |
| Message Persuasion | Does it actually change minds? |
| Emotional Connection | Does it resonate emotionally? |
| Brand Awareness | Will they remember who made this? |
| Attention Capture | Will they watch past the first 3 seconds? |

Each criterion has sub-criteria and scoring definitions. This isn’t a black box—you can see exactly what the Voices are evaluating against.
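To make “this isn’t a black box” concrete, here is one way a rubric could be represented as data. This is a minimal Python sketch under stated assumptions. The `Criterion` and `Rubric` classes, the `validate` method, and the 0–100 scale bounds are hypothetical illustrations, not Chorus’s actual schema. Sub-criteria and scoring definitions are left out for brevity. The six criteria and their questions come directly from the rubric table above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    name: str
    question: str         # the "What It Measures" column from the rubric table
    min_score: int = 0    # assumed 0-100 scale, matching the report's scores
    max_score: int = 100

    def validate(self, score: int) -> int:
        """Reject a Voice's score if it falls outside this criterion's scale."""
        if not self.min_score <= score <= self.max_score:
            raise ValueError(f"{self.name}: {score} is outside {self.min_score}-{self.max_score}")
        return score


@dataclass(frozen=True)
class Rubric:
    name: str
    criteria: tuple[Criterion, ...]  # sub-criteria and scoring definitions omitted


# Hypothetical encoding of the "Video Ad Effectiveness" rubric used for the Coinbase ad.
VIDEO_AD_EFFECTIVENESS = Rubric(
    name="Video Ad Effectiveness",
    criteria=(
        Criterion("CTA Clarity", "Is the action clear and compelling?"),
        Criterion("Message Comprehension", "Do viewers understand the core message?"),
        Criterion("Message Persuasion", "Does it actually change minds?"),
        Criterion("Emotional Connection", "Does it resonate emotionally?"),
        Criterion("Brand Awareness", "Will they remember who made this?"),
        Criterion("Attention Capture", "Will they watch past the first 3 seconds?"),
    ),
)

print([c.name for c in VIDEO_AD_EFFECTIVENESS.criteria])
```

Freezing the dataclasses keeps a rubric immutable once it is defined, so every Voice is scored against the same criteria.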

Phase 2: Multi-Voice Evaluation

Each Virtual Voice watches the creative and scores every criterion. The Coinbase ad was evaluated by 5 Voices, resulting in 30 individual criterion scores (5 Voices × 6 criteria).

Why this matters: A single AI opinion carries little weight on its own. But when 5 different personas, each calibrated to represent a real audience segment, all flag the same issue, you’ve got signal.

Phase 3: Synthesis and Ranking

Chorus aggregates the scores into a composite (72/100), ranks the issues by severity and frequency, and identifies patterns. The highest-impact opportunities bubble up first.
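The synthesis step can be sketched as simple aggregation over a Voices × criteria score matrix. The post doesn’t say how Chorus weights Voices or criteria, so this sketch assumes unweighted means and a complete matrix, meaning every Voice scores every criterion. The `synthesize` function and the two-Voice example scores are hypothetical placeholders, not report data. Sorting criteria from weakest to strongest is a rough stand-in for “the highest-impact opportunities bubble up first.”

```python
from statistics import mean


def synthesize(matrix: dict[str, dict[str, float]]) -> dict:
    """Aggregate a complete Voices x criteria score matrix.

    Assumes every Voice scored every criterion and that all means are
    unweighted; Chorus's actual weighting is not described in the post.
    """
    criteria = list(next(iter(matrix.values())))
    per_voice = {voice: mean(scores.values()) for voice, scores in matrix.items()}
    per_criterion = {c: mean(scores[c] for scores in matrix.values()) for c in criteria}
    return {
        "per_voice": per_voice,
        "per_criterion": per_criterion,
        # With a complete matrix, the mean of criterion means equals the grand mean.
        "composite": mean(per_criterion.values()),
        # Lowest-scoring criteria first: the biggest improvement opportunities.
        "weakest_first": sorted(per_criterion, key=per_criterion.get),
    }


# Hypothetical placeholder scores for 2 Voices x 2 criteria (not report data).
example = {
    "Voice A": {"Attention Capture": 80, "Message Persuasion": 40},
    "Voice B": {"Attention Capture": 90, "Message Persuasion": 50},
}
result = synthesize(example)
print(result["composite"], result["weakest_first"])
```

Ranking discrete issues works the same way, except the sort key becomes how many Voices flagged each issue and how severe it is, instead of a mean score.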

What does the report actually contain?

Let me walk you through the real Coinbase “Everything is Fine” video ad report.

1. Quick-check Overview

The report opens with the headline:

Overall Score: 72/100 — Promising, needs iteration

| Metric | Value |
|---|---|
| Voices Evaluated | 5 |
| Rubric Used | Video Ad Effectiveness |
| Criteria Scored | 6 |
| Score Range | 67–78 |

Right away, you know: this isn’t a disaster, but it’s not ship-ready either. The 67–78 range of the individual Voices’ overall scores tells you they’re roughly aligned, with no wild outliers.

2. Rubric Criteria Breakdown

This is where it gets interesting:

| Criterion | Score | Interpretation |
|---|---|---|
| Attention Capture | 90/100 | Strong hook, viewers will stay |
| Emotional Connection | 88/100 | Resonates with target audience |
| Message Comprehension | 86/100 | Core message is clear |
| CTA Clarity | 71/100 | Action is somewhat clear |
| Brand Awareness | 64/100 | Brand recall is weak |
| Message Persuasion | 34/100 | Critical weakness |

The problem jumps out immediately. The ad captures attention beautifully (90/100) and creates emotional connection (88/100), but it fails catastrophically on persuasion (34/100). The creative team nailed the hook and missed the close. Marketing teams told us this is the pattern traditional focus groups miss: participants say “I liked it” because they genuinely did, since the ad was emotionally engaging. But liking doesn’t mean buying.
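As a quick arithmetic check, the six criterion scores above are consistent with the 72/100 headline if the composite is an unweighted mean of the criteria. The report doesn’t state its formula, so treat that as an assumption: 433 / 6 ≈ 72.17, which rounds to 72. The same few lines also quantify the gap between the hook and the close.

```python
from statistics import mean

# Criterion scores as reported in the Coinbase "Everything is Fine" breakdown.
criterion_scores = {
    "Attention Capture": 90,
    "Emotional Connection": 88,
    "Message Comprehension": 86,
    "CTA Clarity": 71,
    "Brand Awareness": 64,
    "Message Persuasion": 34,
}

# Assumption: composite = unweighted mean of the criterion scores.
composite = mean(criterion_scores.values())
print(round(composite))  # 72  (433 / 6 = 72.17)

strongest = max(criterion_scores, key=criterion_scores.get)
weakest = min(criterion_scores, key=criterion_scores.get)
gap = criterion_scores[strongest] - criterion_scores[weakest]
print(f"{weakest}: {gap}-point gap below {strongest}")
# Message Persuasion: 56-point gap below Attention Capture
```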

3. Top Issues Table

The report ranks issues by how many Voices flagged them:

| Issue | Severity | Voices Affected |
|---|---|---|
| Weak call-to-action | High | 4/5 |
| Brand reveal too late | Medium | 3/5 |
| Value proposition unclear | High | 3/5 |

Four out of five Voices flagged the weak CTA. That’s not one person’s opinion; it’s a pattern worth acting on.
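The ranking logic can be sketched as a two-level sort: first by how many Voices flagged an issue, then by severity. The `Issue` class, the `rank_issues` function, and the numeric severity ordering (including a “Low” level the report doesn’t show) are assumptions for illustration. This tie-break puts “Value proposition unclear” (High, 3/5) ahead of “Brand reveal too late” (Medium, 3/5), but the report’s table lists them the other way round. Chorus’s actual tie-breaking rule may therefore differ.

```python
from dataclasses import dataclass

# Assumed severity ordering; "Low" is added for completeness and does not appear in the report.
SEVERITY_RANK = {"Low": 0, "Medium": 1, "High": 2}


@dataclass(frozen=True)
class Issue:
    name: str
    severity: str
    voices_flagged: int


def rank_issues(issues: list[Issue], total_voices: int) -> list[tuple[str, float]]:
    """Sort by how many Voices flagged each issue, then by severity (both descending)."""
    ranked = sorted(
        issues,
        key=lambda issue: (issue.voices_flagged, SEVERITY_RANK[issue.severity]),
        reverse=True,
    )
    return [(issue.name, issue.voices_flagged / total_voices) for issue in ranked]


# Issues and counts from the Coinbase report's Top Issues table.
coinbase_issues = [
    Issue("Weak call-to-action", "High", 4),
    Issue("Brand reveal too late", "Medium", 3),
    Issue("Value proposition unclear", "High", 3),
]
for name, share in rank_issues(coinbase_issues, total_voices=5):
    print(f"{name}: flagged by {share:.0%} of Voices")
# Weak call-to-action: flagged by 80% of Voices
# Value proposition unclear: flagged by 60% of Voices
# Brand reveal too late: flagged by 60% of Voices
```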

4. Sample Persona Evaluations

The report includes detailed evaluations from 2 preview Voices:

Persona: Marcus (35, Tech Professional)

- Strengths: “Immediately grabbed my attention with the absurdist humor”

- Issues: “Had no idea what they wanted me to do at the end”

- Key quote: “Great ad, but… download what? Do what?”

Persona: Sarah (42, Small Business Owner)

- Strengths: “The ‘everything is fine’ message resonated with my skepticism”

- Issues: “Didn’t realize it was Coinbase until the last 5 seconds”

5. Score Matrix

A full grid showing all 5 Voices × all 6 criteria. This lets you see if certain audience segments respond differently. Maybe your tech-savvy Voices love the humor but your risk-averse Voices are confused.
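One way to use the score matrix for segment analysis is to look for criteria where Voices disagree sharply. This sketch assumes a simple max-minus-min spread and a hypothetical 20-point threshold. The `divergent_criteria` function is illustrative, and the two Voices and their scores are invented to mirror the tech-savvy vs risk-averse example rather than taken from the Coinbase report.

```python
def divergent_criteria(matrix: dict[str, dict[str, float]], min_spread: float) -> dict[str, float]:
    """Return criteria whose max-min spread across Voices is at least min_spread."""
    criteria = next(iter(matrix.values())).keys()
    spreads = {}
    for criterion in criteria:
        scores = [voice_scores[criterion] for voice_scores in matrix.values()]
        spread = max(scores) - min(scores)
        if spread >= min_spread:
            spreads[criterion] = spread
    return spreads


# Hypothetical scores for two audience segments (not taken from the Coinbase report).
matrix = {
    "Tech-savvy Voice": {"Emotional Connection": 92, "Message Comprehension": 88},
    "Risk-averse Voice": {"Emotional Connection": 85, "Message Comprehension": 55},
}
print(divergent_criteria(matrix, min_spread=20))  # {'Message Comprehension': 33}
```

Both segments enjoy the ad (a 7-point spread on Emotional Connection), but they understand it very differently. A per-segment rewrite of the message, rather than a change to the hook, would target that difference.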

6. Full Persona Breakdowns

Each Voice gets a detailed section with:

- Overall evaluation summary

- Criterion-by-criterion scores and reasoning

- Specific quotes and observations

- Suggested improvements from that perspective

The “so what”—what does this enable?

Marketing teams we talked to described two options before Quick-check tests:

Option A: Ship blind. Launch the creative, watch the metrics, iterate when they underperform. Cost: wasted ad spend and opportunity cost.

Option B: Wait for research. Commission a focus group, wait 3-6 weeks, get a deck of insights that arrive after the campaign window closes. Cost: time and momentum.

Quick-check tests offer a third option: structured preflight in minutes. You can run one before the budget meeting. You can compare variants before the media buy. You can validate the hook, the close, the CTA—all while there’s still time to change them.

For the Coinbase ad, the insight was clear: great attention, terrible persuasion, a weak CTA, and a brand reveal that comes too late. That’s actionable. That’s something you can fix in the next cut, not the next quarter.

Pre-Testing vs Post-Testing: Where Does Quick-check Fit?

In advertising research, there’s a fundamental distinction between pre-testing (evaluating creative before launch) and post-testing (measuring performance after launch). Each serves a different purpose:

| Type | When | What You Learn | Tools |
|---|---|---|---|
| Pre-testing | Before spend | Will this work? What should we fix? | Quick-check, Kantar LINK AI, focus groups |
| Post-testing | After campaign | Did it work? What drove results? | Brand lift studies, attribution, surveys |

The pre-testing gap: Traditional pre-testing (focus groups, concept tests) is thorough but slow—often 3-6 weeks. By the time results arrive, the campaign window has closed. Many teams skip pre-testing entirely and rely on post-testing to learn what worked.

The problem with post-testing only: You learn what failed after you’ve spent the budget. Post-testing is essential for optimization, but it’s expensive tuition.

Where Quick-check fits: It’s a pre-testing tool designed for modern timelines. You get structured feedback before launch—fast enough to actually iterate. Teams told us they use Quick-check as a “preflight gate”: creative doesn’t go to media buy until it clears a minimum score threshold.
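A preflight gate like the one teams describe reduces to a threshold check. The post doesn’t give the thresholds teams use, so the 80-point composite minimum and the 50-point per-criterion floor below are hypothetical values. The per-criterion floor is one possible extension, not something the report describes. The `preflight_gate` function name is also illustrative. Run against the Coinbase scores, the gate blocks the ad on both counts.

```python
def preflight_gate(
    composite: float,
    criterion_scores: dict[str, float],
    min_composite: float = 80,   # hypothetical composite threshold
    min_criterion: float = 50,   # hypothetical floor for any single criterion
) -> tuple[bool, list[str]]:
    """Return (passes, reasons) for a hypothetical two-part preflight gate."""
    reasons = []
    if composite < min_composite:
        reasons.append(f"composite {composite} < {min_composite}")
    for name, score in criterion_scores.items():
        if score < min_criterion:
            reasons.append(f"{name} {score} < {min_criterion}")
    return (not reasons, reasons)


# Composite and criterion scores from the Coinbase report.
coinbase = {
    "Attention Capture": 90,
    "Emotional Connection": 88,
    "Message Comprehension": 86,
    "CTA Clarity": 71,
    "Brand Awareness": 64,
    "Message Persuasion": 34,
}
passed, reasons = preflight_gate(72, coinbase)
print(passed)   # False
print(reasons)  # ['composite 72 < 80', 'Message Persuasion 34 < 50']
```

Returning the reasons alongside the pass/fail flag keeps the gate aligned with the rest of the report: a blocked creative comes with the specific scores that need to move.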

The ideal workflow uses both: Quick-check for rapid pre-testing on variants, then post-testing to validate that pre-test predictions matched real-world performance.

Who is this actually for?

Quick-check tests are designed for teams who:

- Ship creative faster than traditional research can keep up

- Need to validate concepts before committing budget

- Want structured evaluation, not just “I like it / I don’t like it”

- Are tired of waiting weeks for feedback that arrives too late

Brand marketers with longer planning cycles tell us they still value full focus groups for major campaigns. Quick-check is the complement, not the replacement—the preflight you run on every variant, not just the hero creative.

See it in action

Want to see what a Quick-check report looks like?

Start by browsing our research page—we’ve published full reports on real ads and landing pages, including the Coinbase evaluation referenced in this post.

Ready to try it on your own creative? Book a demo and bring a piece you’re about to ship. Pick something you’re uncertain about—the ad that’s “probably fine” but hasn’t been validated. We’ll run it through Chorus live and walk you through the report.

Because 72/100 with a clear path to 85 is better than launching at 72 and wondering why it underperformed.

Want to see more Quick-check reports in action? Check out the Coinbase video ad evaluation or book a demo.