Message Testing at Scale: How to Test Dozens of Variants Without Burning Budget

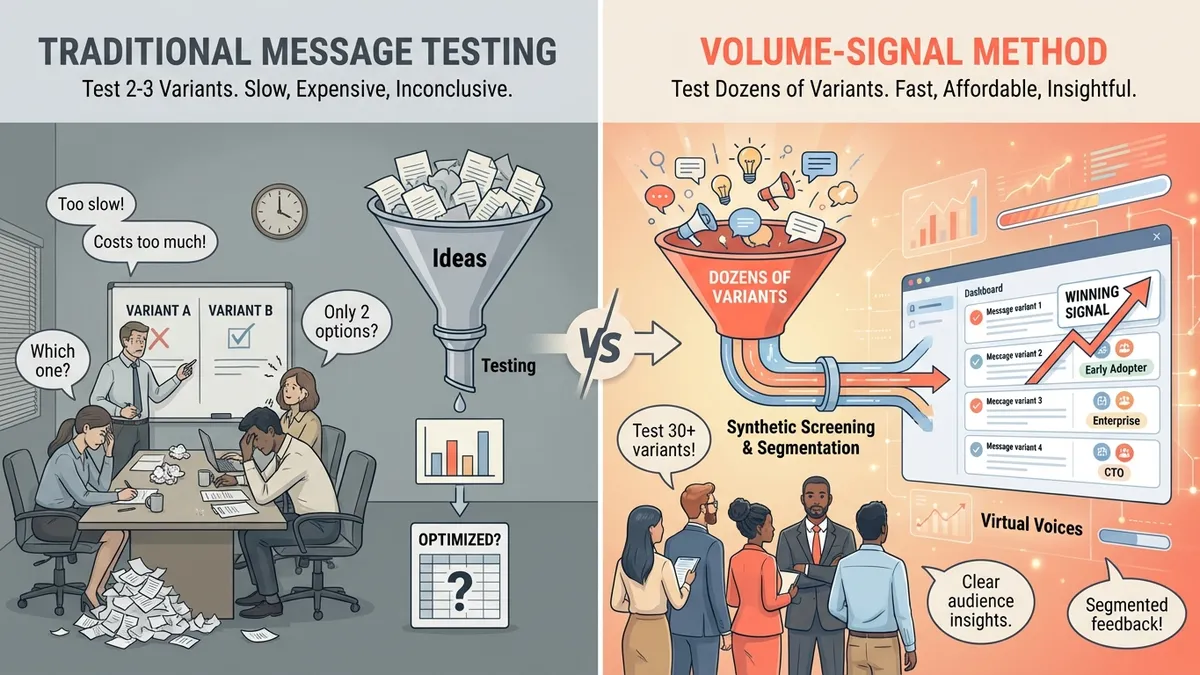

You wrote nine subject lines. Your team debated them for forty minutes. You picked two, ran an A/B test, and the winner lifted open rates by 0.3%. Somewhere, a project manager updated a spreadsheet and called it “optimization.” Nobody felt optimized. Message testing — the process of putting copy variants in front of audiences before you commit budget — is supposed to prevent this kind of slow, inconclusive grind. But the way most teams do it, the testing itself becomes the bottleneck.

TL;DR

Most marketing teams can only afford to test 2-3 messaging variants, which means they’re picking from a tiny slice of the possibility space. Virtual Voices, synthetic personas calibrated to real demographics, let you test dozens of message variants in hours instead of weeks, at a fraction of traditional research costs. Start with one campaign and ten variants. You’ll likely learn more in one afternoon than from a full quarter of A/B tests.

The Hidden Cost of Small Message Tests

When we talked to product marketing managers about their copy testing tools and workflows, the same frustration kept surfacing: they knew they were under-testing. Not because they were lazy, but because the math didn’t work. Traditional message testing methods (focus groups, surveys, panel-based testing) are priced per variant. Every additional headline, tagline, or value prop you want to test adds cost and time. So teams self-censor before they even start. They kill thirty ideas to test three, and then wonder why the winner barely beats the control.

The real expense isn’t the research bill. It’s the opportunity cost of all the variants you never tested. Growth teams tell us the same thing in conversation after conversation: they suspect their best messaging is sitting in a Google Doc somewhere, untested, because the budget only covered a handful of options. It’s like picking lottery tickets with your eyes closed, except you only get to pick two. That’s not message testing. That’s message guessing.

What Is Message Testing (And Why Most Teams Under-Do It)?

Message testing is the practice of showing different copy variants — headlines, taglines, value propositions, CTAs — to representative audiences and measuring which ones land. It’s how you move from “I think this sounds good” to “this actually works with our target buyer.” The tools range from old-school (focus groups, intercept surveys) to modern (copy testing tools, A/B platforms, synthetic audience testing).

The problem isn’t that teams don’t value message testing. It’s that the cost-per-variant model forces them to test too few options to find anything surprising. If you can only test three variants, you’ll test three safe variants. The breakthrough messaging — the weird angle, the counterintuitive hook, the one your VP would have vetoed — never makes the cut.

The Volume-Signal Method: A Better Way to Test Messaging Variants at Scale

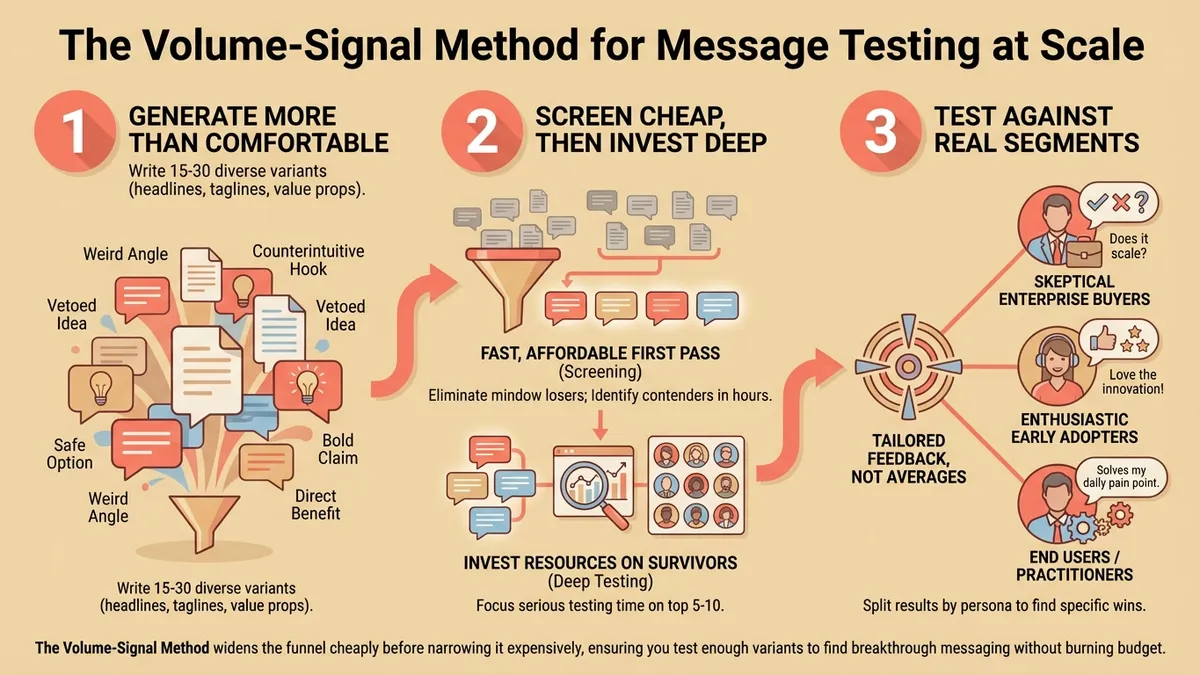

When we studied teams that consistently shipped strong copy, we noticed a pattern. They didn’t test harder. They tested wider. We started calling it the Volume-Signal Method — not because we invented it, but because the best teams we talked to were already doing some version of it without a name.

1. Generate More Than You’re Comfortable With

Most teams write 3-5 variants and start narrowing. The teams we studied wrote 15-30. Not because every variant was precious, but because volume creates surface area for surprise. It’s the same idea as psychohistory in Asimov’s Foundation: no single individual is predictable, but patterns across large numbers become clear. Your job in this phase is to produce raw material, not polish. Write fast. Write weird. Write the version that makes you nervous.

2. Screen Cheap, Then Invest Deep

Here’s where the economics shift. Instead of running every variant through an expensive testing process, you screen the full batch through a fast, affordable first pass. This is where message testing without focus groups becomes practical — the Volume-Signal Method works by widening the funnel cheaply before narrowing it expensively. You’re not asking for a final verdict at this stage — you’re asking “which ten of these thirty deserve a closer look?” A fast screen eliminates the obvious losers and surfaces the unexpected contenders. Then you invest serious testing time only on the survivors.
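To make the screening step concrete, here is a minimal Python sketch of a cheap first pass. Everything in it is an illustrative assumption: the `screen_variants` helper, the sample headlines, and the scores are made up, and the scoring source could be an internal rubric, a quick poll, or a synthetic audience tool.

```python
from typing import Callable


def screen_variants(
    variants: list[str],
    cheap_score: Callable[[str], float],
    keep: int,
) -> list[str]:
    """Rank variants by a fast, inexpensive score and keep the top `keep`."""
    ranked = sorted(variants, key=cheap_score, reverse=True)
    return ranked[:keep]


# Hypothetical first-pass scores (0-10). These numbers are invented
# purely to show the mechanics of the screen.
first_pass = {
    "Stop guessing which headline works": 7.4,
    "Test thirty messages before lunch": 8.1,
    "Research-grade feedback, minus the wait": 6.2,
    "Your best copy is still in a Google Doc": 8.6,
    "Messaging insights for modern teams": 3.9,
}

shortlist = screen_variants(
    list(first_pass),
    cheap_score=lambda variant: first_pass[variant],
    keep=3,
)

for headline in shortlist:
    print(headline)
```

The screen only has to be good enough to drop the obvious losers. The survivors still go through a proper test before anything ships.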

3. Test Against Real Segments, Not Averages

The biggest mistake in marketing message testing isn’t testing too few variants. It’s testing against a monolithic “general audience.” A message that scores average across everyone might score brilliantly with your actual buyer and terribly with everyone else, or vice versa. The teams that got the most value from high-volume testing split their feedback by segment: skeptical enterprise buyers reacted differently than enthusiastic early adopters. Same message, different signal depending on who’s reading.
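A small Python sketch shows how an overall average can hide a segment split. The variants, segment names, and scores are hypothetical, chosen so that two messages tie overall while behaving very differently by segment.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical responses: (variant, segment, score on a 0-10 scale).
responses = [
    ("A", "enterprise_buyer", 8), ("A", "enterprise_buyer", 9),
    ("A", "early_adopter", 3), ("A", "early_adopter", 4),
    ("B", "enterprise_buyer", 6), ("B", "enterprise_buyer", 6),
    ("B", "early_adopter", 6), ("B", "early_adopter", 6),
]


def mean_scores(rows, key):
    """Group rows by `key(row)` and return the mean score per group."""
    groups = defaultdict(list)
    for row in rows:
        groups[key(row)].append(row[2])
    return {group: mean(scores) for group, scores in groups.items()}


overall = mean_scores(responses, key=lambda r: r[0])
by_segment = mean_scores(responses, key=lambda r: (r[0], r[1]))

print(overall)      # both variants share the same overall mean
print(by_segment)   # variant A splits sharply by segment, B does not
```

In this made-up data, the overall view says A and B are interchangeable. The segment view says A is the stronger pick if enterprise buyers are the people you actually sell to.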

How to Start (Without Overthinking It)

You don’t need a new tech stack or a big research budget. Here’s a version you can run this week:

Pick one campaign. Don’t overhaul everything. Choose a launch, a landing page rewrite, or an email sequence that matters. Write 10-15 variants of the key message. Give yourself permission to include at least three options you’d normally kill early.

Screen the batch fast. Use whatever gives you speed: an internal review with scoring criteria, a quick poll on social, or a synthetic audience tool. The point is to go from fifteen to five in hours, not weeks. Budget-friendly copy testing alternatives exist now that didn’t three years ago — you don’t have to choose between rigor and speed.

Test the survivors properly. Take your top five and run them through a real test — A/B, panel, or structured feedback. You’ve already done the hard work of filtering. Now you’re investing in variants that earned their spot, not variants that survived a conference room debate.

Where Chorus Fits In

We built Chorus specifically because this workflow was impossible to do affordably with traditional tools. Chorus lets you test dozens of messaging variants against Virtual Voices — synthetic personas calibrated to real demographic and psychographic profiles. You upload your variants, pick a Testing Kit (say, “B2B SaaS Landing Page” or “Email Subject Lines”), and get structured feedback in minutes. Not weeks. Not after scheduling eight strangers in a room and hoping they show up. (Focus groups are wonderful — if you enjoy paying thousands to hear eight people politely agree with whoever talks loudest. We love them, truly.)

The Creative Check feature scores each variant on dimensions that matter — clarity, credibility, emotional pull, distinctiveness — so you can compare thirty options side by side instead of squinting at two bar charts. And because the Virtual Voices respond as specific audience segments, you see where message A wins with skeptical CTOs but message B wins with end users. That’s the signal most teams never get.

The Uncomfortable Math

Here’s what nobody wants to say out loud: if you’re testing fewer than ten messaging variants per campaign, you’re probably shipping your fourth-best option. Not because you’re bad at writing copy, but because the search space is too large to explore with small tests. The teams that win on messaging aren’t smarter writers. They just test more, faster, and cheaper. That’s not a secret. It’s just been hard to do — until now.
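One way to put rough numbers on this is a toy model, sketched below in Python. It rests on strong assumptions that are ours, not measured results: there is a pool of candidate messages with a strict true ranking, you test a random subset, and the test always identifies the best message in that subset. Under those assumptions the expected true rank of your winner is (pool size + 1) / (number tested + 1). The function names are illustrative.

```python
from fractions import Fraction
from itertools import combinations


def expected_best_rank(pool_size: int, tested: int) -> Fraction:
    """Expected true rank (1 = best) of the best variant in a random subset.

    Closed form for the expected minimum of `tested` distinct ranks drawn
    uniformly from 1..pool_size.
    """
    return Fraction(pool_size + 1, tested + 1)


def brute_force_best_rank(pool_size: int, tested: int) -> Fraction:
    """Same quantity by enumerating every subset (small pools only)."""
    subsets = list(combinations(range(1, pool_size + 1), tested))
    return Fraction(sum(min(s) for s in subsets), len(subsets))


# Sanity check: the closed form matches enumeration on a small pool.
assert expected_best_rank(6, 2) == brute_force_best_rank(6, 2)

# Toy scenario: 30 candidate messages, different test sizes.
for tested in (3, 10, 30):
    rank = float(expected_best_rank(30, tested))
    print(f"test {tested:>2} of 30: expected rank of winner = {rank:.2f}")
```

In this toy model, testing 3 of 30 ideas leaves you with a winner whose expected true rank is 7.75, while testing 10 brings it under 3. Real tests are noisier and real pools are not sampled at random, so read this as intuition for why width matters, not as a forecast.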

Want to test thirty messaging variants before lunch? Try Chorus and see what your audience actually thinks — not what your loudest stakeholder guesses.