Testing Kits and Bundles: How to evaluate content without building frameworks from scratch

Here’s a scenario you’ve probably lived through:

You want feedback on your landing page. But what kind of feedback?

- Is the headline clear?

- Does the value prop land?

- Are the trust signals working?

- Is the CTA compelling?

- Does it feel credible?

- Will anyone actually scroll past the fold?

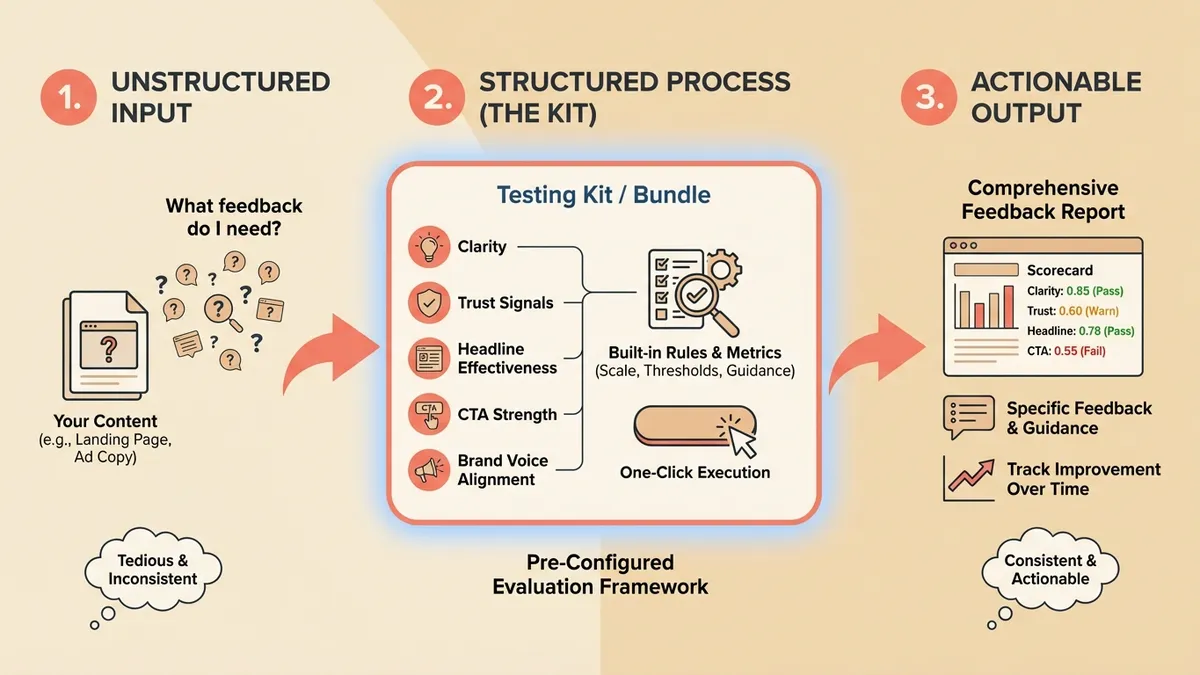

Each of these is a different evaluation. Running them individually is tedious. Building a custom framework every time is worse. This is why we built testing kits.

TL;DR

Testing Kits are pre-configured evaluation frameworks that check multiple dimensions at once—clarity, trust, headline effectiveness, CTA strength. One click, structured output, no framework fatigue.

Pick the kit for your content type and stop reinventing evaluation criteria every time.

What is Creative Testing?

Creative testing is the process of evaluating marketing content—ads, landing pages, headlines, video—before you spend budget on it. The industry uses various terms: creative pre-testing, ad testing, copy testing, or creative effectiveness testing. Whatever you call it, the goal is the same: find out if your creative will work before you learn the hard way.

When we talked to marketing teams, we heard the same pattern: they either skipped testing entirely (too slow, too expensive) or relied on gut feeling plus a quick poll of colleagues. Traditional creative testing tools like Kantar LINK AI or System1 offer rigorous evaluation, but the timelines and costs don’t fit how modern teams actually ship content.

That’s the gap Testing Kits are designed to fill. Instead of building evaluation frameworks from scratch or waiting weeks for research, you get pre-configured creative testing tools that run in minutes. The evaluation criteria already exist—you just choose which kit matches your content type.

What’s a testing kit?

A Testing Kit is a pre-configured set of evaluation criteria designed for a specific content type. Elsewhere in the industry you may see the same idea called a creative scoring framework, an ad testing rubric, or an evaluation bundle (see our glossary for terminology).

Instead of asking “evaluate this landing page,” you’re asking:

“Evaluate this landing page against the standard criteria that matter for landing pages: clarity, trust, headline effectiveness, CTA strength, and brand voice alignment.”

One click, multiple dimensions, structured output.

The building blocks: Evaluation criteria

Every testing kit is made of evaluation criteria—individual measurement frameworks that assess specific dimensions of your content.

Think of each criterion like Star Trek’s Prime Directive: a clear rule that keeps feedback consistent across different situations and different evaluators.

Each evaluation criterion typically includes:

- Metrics: What exactly we’re measuring (e.g., “Opening Hook Effectiveness”)

- Scale: How we score it (usually 0-1)

- Thresholds: What counts as pass/warn/fail

- Guidance: What good looks like, what to avoid

- Questions: What to ask the evaluator

Here’s a real example from our Attention Capture criterion:

Metric: Opening Hook Effectiveness

Description: Ability of the first 3-5 seconds/lines to capture attention.

Pass: >= 0.75

Warn: >= 0.6 and < 0.75

Fail: < 0.6

DO:

- Use distinct visual or audio hooks that immediately signal value

- Pose a provocative question or strong lead statement

AVOID:

- Slow build or lengthy setup without immediate payoff

- Generic openers that could apply to any product

This isn’t vibes-based. It’s structured evaluation with clear criteria.
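A criterion like this maps naturally onto a small data structure. The Python sketch below is illustrative only and is not Chorus’s internal format. The `Metric` class, its fields (`pass_at`, `warn_at`, `do`, `avoid`), and the `verdict` method are hypothetical names. The thresholds and guidance text are copied from the Opening Hook Effectiveness example.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Metric:
    """One measured dimension of an evaluation criterion, scored on a 0-1 scale.

    Hypothetical structure for illustration; the field names are not Chorus's.
    """

    name: str
    description: str
    pass_at: float  # scores at or above this pass
    warn_at: float  # scores at or above this (but below pass_at) warn; lower scores fail
    do: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)

    def verdict(self, score: float) -> str:
        """Map a 0-1 score to "pass", "warn", or "fail" using the thresholds."""
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score must be between 0 and 1, got {score}")
        if score >= self.pass_at:
            return "pass"
        if score >= self.warn_at:
            return "warn"
        return "fail"


# Values copied from the Opening Hook Effectiveness example above.
opening_hook = Metric(
    name="Opening Hook Effectiveness",
    description="Ability of the first 3-5 seconds/lines to capture attention.",
    pass_at=0.75,
    warn_at=0.6,
    do=[
        "Use distinct visual or audio hooks that immediately signal value",
        "Pose a provocative question or strong lead statement",
    ],
    avoid=[
        "Slow build or lengthy setup without immediate payoff",
        "Generic openers that could apply to any product",
    ],
)

for score in (0.82, 0.75, 0.68, 0.6, 0.41):
    print(score, opening_hook.verdict(score))
```

A score that sits exactly on a boundary (0.75 or 0.6) lands in the higher band, matching the `>=` comparisons in the thresholds.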

How testing kits combine criteria

A testing kit bundles multiple evaluation criteria into a coherent assessment.

For example, our Marketing Copy Baseline kit includes:

- Marketing Copy Core — Clarity, value prop, benefit communication

- Perceived Trust — Credibility signals, proof points, risk reduction

- Headline Effectiveness — Hook strength, promise clarity, curiosity triggers

- Brand Voice Alignment — Tone consistency, personality match

Run this kit against your landing page copy, and you get scores and feedback across all four dimensions—not just a single “it’s good” or “needs work.”
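One way to picture a kit’s output is a single score per criterion, built from the dimensions that criterion covers. This is a minimal sketch under stated assumptions. The dictionary layout, the `summarize` function, the unweighted mean, and the sample scores are all hypothetical. The evaluators that would actually produce those scores are out of scope here.

```python
from statistics import mean

# Hypothetical encoding of the Marketing Copy Baseline kit: each criterion maps
# to the dimensions listed for it above. Treating each dimension as a separately
# scored metric is an assumption made for illustration.
MARKETING_COPY_BASELINE: dict[str, list[str]] = {
    "Marketing Copy Core": ["Clarity", "Value prop", "Benefit communication"],
    "Perceived Trust": ["Credibility signals", "Proof points", "Risk reduction"],
    "Headline Effectiveness": ["Hook strength", "Promise clarity", "Curiosity triggers"],
    "Brand Voice Alignment": ["Tone consistency", "Personality match"],
}


def summarize(kit: dict[str, list[str]], scores: dict[str, float]) -> dict[str, float]:
    """Collapse per-metric scores (0-1) into one score per criterion.

    Uses a plain unweighted mean, which is an assumption; a real kit
    might weight metrics differently.
    """
    report: dict[str, float] = {}
    for criterion, metrics in kit.items():
        missing = [m for m in metrics if m not in scores]
        if missing:
            raise KeyError(f"{criterion}: no score for {missing}")
        report[criterion] = round(mean(scores[m] for m in metrics), 2)
    return report


# Made-up scores for one landing page, purely for illustration.
scores = {
    "Clarity": 0.8, "Value prop": 0.7, "Benefit communication": 0.6,
    "Credibility signals": 0.9, "Proof points": 0.5, "Risk reduction": 0.7,
    "Hook strength": 0.6, "Promise clarity": 0.5, "Curiosity triggers": 0.4,
    "Tone consistency": 0.8, "Personality match": 0.9,
}

for criterion, value in summarize(MARKETING_COPY_BASELINE, scores).items():
    print(f"{criterion}: {value}")
```

Raising an error on a missing metric, rather than silently skipping it, keeps a partial run from looking like a complete one.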

Why this matters

Consistency across tests

When you use the same criteria over time, you can actually track improvement.

“Last month our headline effectiveness was 0.58. After the rewrite, it’s 0.81.”

That’s actionable. That’s measurable. That’s how you build a feedback loop that actually compounds.
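The comparison in that quote is simple arithmetic once both runs use the same criteria. A minimal sketch, assuming each run’s results are stored as a mapping from criterion name to a 0-1 score. `compare_runs` is a hypothetical helper, not a Chorus API.

```python
def compare_runs(before: dict[str, float], after: dict[str, float]) -> dict[str, float]:
    """Change in each criterion's score between two runs (positive means improved).

    Only criteria scored in both runs are compared, because a delta is
    meaningful only when the same criterion was applied both times.
    """
    shared = sorted(before.keys() & after.keys())
    return {criterion: round(after[criterion] - before[criterion], 2) for criterion in shared}


last_month = {"Headline Effectiveness": 0.58}
after_rewrite = {"Headline Effectiveness": 0.81}
print(compare_runs(last_month, after_rewrite))  # {'Headline Effectiveness': 0.23}
```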

No framework fatigue

You don’t need to reinvent evaluation criteria every time. Pick the kit that matches your content type:

- Marketing Copy — Headlines, landing pages, ad copy

- Pitch Deck — Investor presentations, sales decks

- Social Post — LinkedIn, Instagram, organic and sponsored

- Email Campaign — Newsletters, drip sequences

- Blog/Article — SEO content, thought leadership

The hard work of defining “what good looks like” is already done.

Multiple perspectives, structured output

When you combine testing kits with virtual voices, you get:

- Multiple personas evaluating your content

- Against consistent, structured criteria

- With specific feedback and scores

- That you can compare across iterations

It’s the difference between “my coworker said it was confusing” and “3 out of 5 personas rated headline clarity below 0.6, citing jargon in the first sentence.”
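A summary like that comes from counting individual persona scores against a threshold. The sketch below assumes a hypothetical `PersonaRating` record per virtual voice. The persona names, scores, and comments are invented for illustration. The 0.6 threshold mirrors the fail boundary in the Opening Hook example.

```python
from dataclasses import dataclass


@dataclass
class PersonaRating:
    """One virtual voice's score (0-1) and comment for a single metric (hypothetical)."""

    persona: str
    score: float
    comment: str


def summarize_low_scores(
    metric: str, ratings: list[PersonaRating], threshold: float = 0.6
) -> tuple[str, list[str]]:
    """Count personas that scored below the threshold and collect their reasons."""
    low = [r for r in ratings if r.score < threshold]
    summary = f"{len(low)} out of {len(ratings)} personas rated {metric} below {threshold}"
    return summary, [f"{r.persona}: {r.comment}" for r in low]


# Invented ratings for a single landing-page headline.
ratings = [
    PersonaRating("Skeptical CFO", 0.45, "Jargon in the first sentence"),
    PersonaRating("First-time visitor", 0.52, "Not sure what the product does"),
    PersonaRating("Technical evaluator", 0.58, "Jargon in the first sentence"),
    PersonaRating("Existing customer", 0.74, "Clear enough for me"),
    PersonaRating("Agency marketer", 0.81, "Headline states the benefit"),
]

summary, reasons = summarize_low_scores("headline clarity", ratings)
print(summary)  # 3 out of 5 personas rated headline clarity below 0.6
for reason in reasons:
    print(" -", reason)
```

Keeping the comments next to the counts is what turns a number into something a writer can act on.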

Built-in vs. custom

Chorus ships with testing kits for common content types. But you can also:

- Customize existing kits — Add or remove criteria based on what matters to you

- Build custom criteria — Define your own metrics for brand-specific evaluation

- Create new kits — Bundle your custom criteria for reuse

Start with the defaults. Customize when you need to.
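Customizing a kit can be pictured as deriving a new bundle from a default one without altering the original. A minimal sketch, assuming kits are stored as ordered tuples of criterion names. The four built-in names come from the Marketing Copy Baseline kit above. `customize` and the custom criterion "Brand Glossary Compliance" are hypothetical.

```python
# Built-in kit, stored as an immutable tuple of criterion names.
MARKETING_COPY_BASELINE = (
    "Marketing Copy Core",
    "Perceived Trust",
    "Headline Effectiveness",
    "Brand Voice Alignment",
)


def customize(
    base: tuple[str, ...], add: tuple[str, ...] = (), remove: tuple[str, ...] = ()
) -> tuple[str, ...]:
    """Return a new kit derived from `base`; the built-in kit itself is never modified."""
    unknown = set(remove) - set(base)
    if unknown:
        raise ValueError(f"cannot remove criteria not in the kit: {sorted(unknown)}")
    kept = [c for c in base if c not in remove]
    # Append new criteria in the order given, skipping any the kit already has.
    for criterion in add:
        if criterion not in kept:
            kept.append(criterion)
    return tuple(kept)


# "Brand Glossary Compliance" is an invented, brand-specific custom criterion.
brand_kit = customize(
    MARKETING_COPY_BASELINE,
    add=("Brand Glossary Compliance",),
    remove=("Brand Voice Alignment",),
)
print(brand_kit)
```

Because the default kit is an immutable tuple, a customization can only produce a new kit, so the built-in version stays available for side-by-side comparison.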

The practical workflow

Here’s how testing kits fit into a real workflow:

- Upload your content (landing page screenshot, video, copy doc)

- Select a testing kit (or let Chorus recommend one)

- Choose your panel (which virtual voices should evaluate)

- Run the test

- Review structured results (scores + qualitative feedback per dimension)

- Iterate (fix issues, re-run, compare)

Total time: minutes, not days.

What testing kits don’t do

Testing kits aren’t magic. They don’t:

- Guarantee your content will convert

- Replace real user testing

- Tell you what to write (only evaluate what you’ve written)

They’re a quality gate—a way to catch obvious problems before launch, and a framework for tracking improvement over time.

Want to see Chorus in action? Book a demo or see example reports.