Is your marketing research slow? The 3-2-1 loop to get answers in days (not weeks)

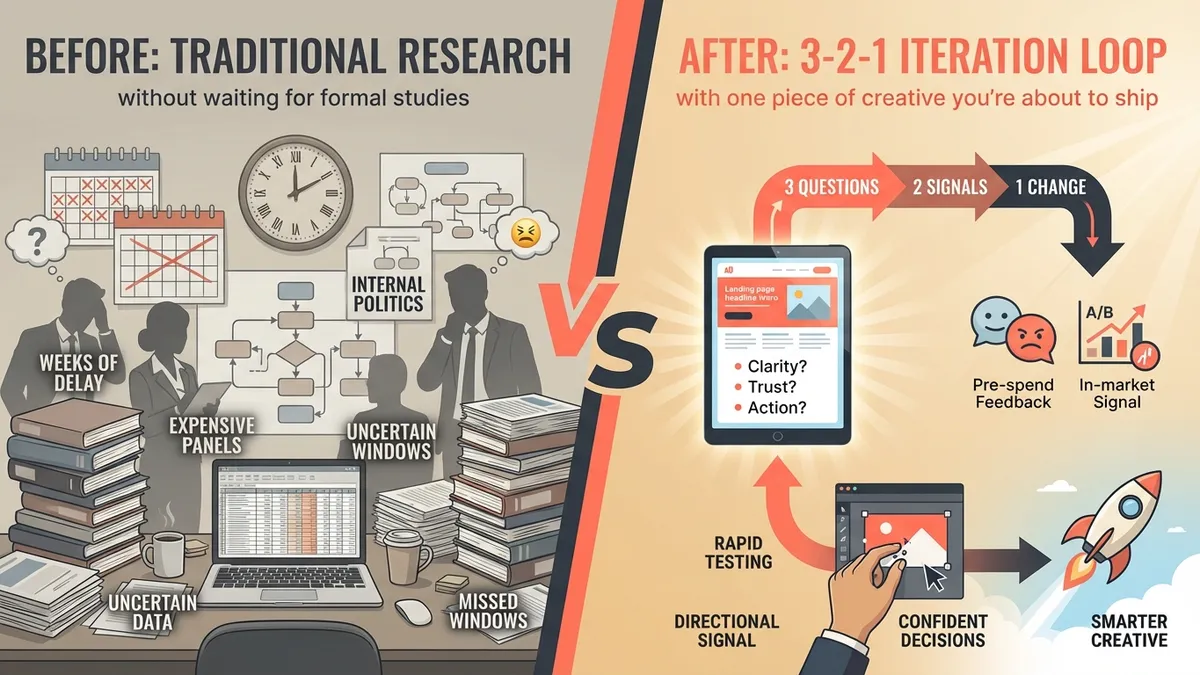

When we talk to marketers, the first thing they mention isn’t exciting strategy work. It’s waiting. If slow marketing research is the recurring theme in your org, it’s usually not because your team is disorganized. It’s because the system is built for events (panels, studies, decks), while modern go-to-market runs on iterations.

Product marketing managers (PMMs) told us their research loop was often slower than their shipping loop. The result? Not insight, just expensive guessing.

TL;DR

Marketing research is slow because it optimizes for legitimacy, not speed. The 3-2-1 loop (3 decision questions, 2 signal types, 1 intentional change) lets you iterate weekly without waiting for formal studies.

Try it once this week with one piece of creative you’re about to ship.

What is the 3-2-1 Research Loop?

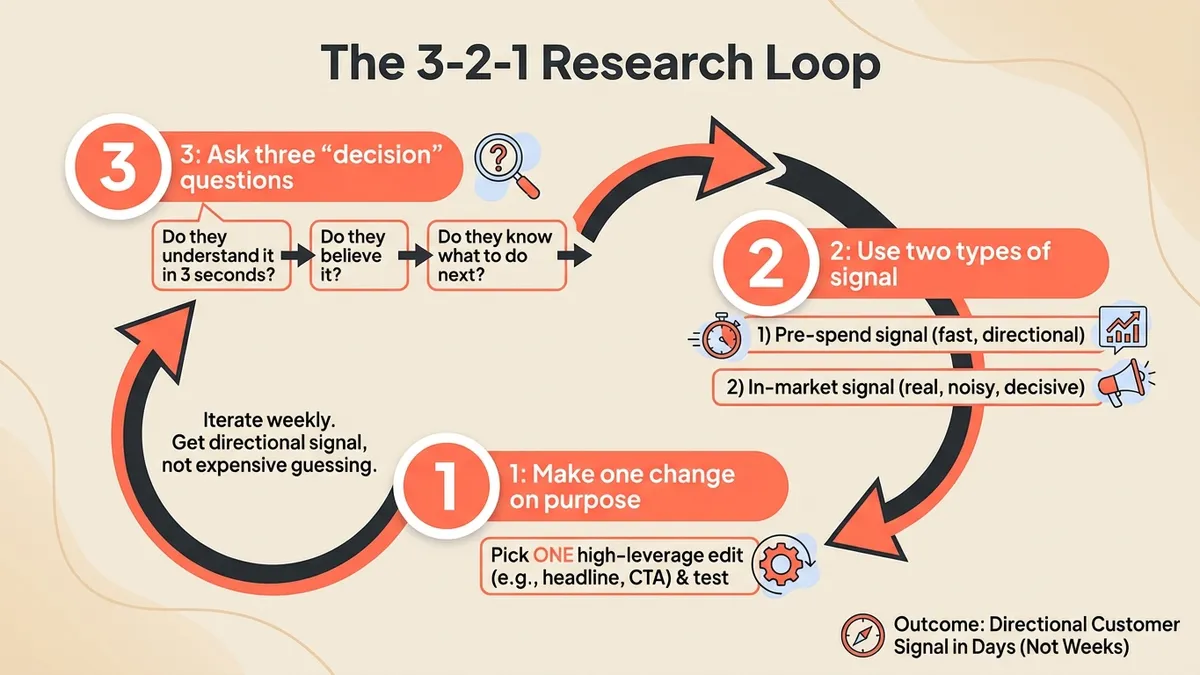

The 3-2-1 Research Loop is a lightweight framework to get marketing research answers in days instead of weeks. It consists of three decision questions you need answered, two types of signal (pre-spend feedback + in-market data), and one intentional change per cycle. The goal: keep research moving at the speed of creative without waiting for formal studies.

The hidden cost of slow research (it’s not just dollars)

Slow research taxes you in three places:

- Time: You ship late, or you ship without confidence.

- Spend: You run campaigns to learn things you could have learned before spend.

- Team sanity: The calendar becomes the bottleneck, and everyone quietly blames everyone else.

The worst part is the compounding effect: if feedback takes three weeks, you run fewer studies, each with higher stakes and more politics. Which is how you end up paying a lot of money to hear: “It’s fine.”

Focus groups are great—if you enjoy paying for snacks and ambiguity.

Why is marketing research slow? It’s slow by design

Most research processes optimize for legitimacy, not latency. They’re built to produce something that feels defensible in a meeting: a deck, a sample size, a methodology section, a timeline you can point at when someone asks “are we sure?”

That’s not wrong—we like defensible. But it creates a predictable failure mode for fast teams: by the time you have “real research,” your campaign has already shipped, missed the window, or been “paused for learnings” (marketing’s gentlest euphemism).

Growth and PMM teams told us what they needed day-to-day wasn’t a quarterly study. It was directional signal that made the next iteration smarter.

The 3-2-1 Research Loop (a framework your calendar can handle)

We like systems. So here’s a simple one. The 3-2-1 Research Loop is a lightweight way to keep research moving at the speed of creative.

3: Ask three “decision” questions (not research questions)

Before you test anything, write the three questions you actually need answered. Not “What do people think of this?” but:

- Do they understand it in 3 seconds?

- Do they believe it?

- Do they know what to do next?

When those questions are clear, the output becomes useful. When they’re vague, you get… vibes.

2: Use two types of signal

Most teams over-index on a single signal type and call it “data-driven.” You want two: pre-spend signal (fast, directional) and in-market signal (real, noisy, decisive).

Pre-spend signal catches obvious failures early—jargon, trust gaps, message mismatch, missing proof, unclear CTA. In-market signal tells you what happens in reality.

This is not either/or. It’s a relay race. You don’t replace experiments—you stop wasting experiments on fixable basics.

1: Make one change on purpose

The fastest way to keep research slow is to “change everything” and then ask why performance changed. Pick one high-leverage edit—a headline rewrite, a proof point swap, a CTA reframing, or an above-the-fold restructure. Then test. Then learn. Then repeat.

If you can’t write the learning in one sentence, the loop broke somewhere.

A quick example (so this isn’t just philosophy)

Say you’re about to launch a new landing page. You’ve got a headline, a hero section, and a CTA. You also have a Slack thread with 19 opinions and zero decisions. Run the 3-2-1 loop like this:

3 questions

- Can a skeptical buyer explain what this is in 3 seconds?

- Does anything feel like a big claim with no proof?

- Is the next step obvious and low-friction?

2 signals

- Pre-spend: two outside perspectives (one skeptical, one enthusiastic)

- In-market: the experiment you were going to run anyway

1 change

Pick the highest-leverage fix you can make in 20 minutes. Usually it’s one of these: replace a vague claim with a concrete mechanism or proof point, move the “who it’s for” higher, or make the CTA say what happens after the click.

Now the in-market test is answering a better question—not “is this page good?” but “did this specific fix remove the top point of friction?”
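If your team tracks tests in a script or notebook, the example above could be recorded roughly like this. It is a minimal sketch, not a prescribed format: the `LoopCycle` class and its field names are hypothetical and are not part of Chorus or any other tool. It only checks that a cycle has three questions, both signal types, and one named change.

```python
from dataclasses import dataclass


@dataclass
class LoopCycle:
    """One pass through the 3-2-1 loop (hypothetical structure, for illustration only)."""
    questions: list[str]     # the three decision questions
    pre_spend_signal: str    # fast, directional feedback gathered before spend
    in_market_signal: str    # the live experiment
    change: str              # the single intentional edit
    learning: str = ""       # filled in after the test, ideally one sentence

    def problems(self) -> list[str]:
        """Return the ways this cycle breaks the 3-2-1 rules (empty if none)."""
        issues = []
        if len(self.questions) != 3:
            issues.append(f"expected 3 decision questions, got {len(self.questions)}")
        if not self.pre_spend_signal.strip():
            issues.append("missing pre-spend signal")
        if not self.in_market_signal.strip():
            issues.append("missing in-market signal")
        if not self.change.strip():
            issues.append("missing the one intentional change")
        return issues


# The landing page example from above, written as one cycle.
cycle = LoopCycle(
    questions=[
        "Can a skeptical buyer explain what this is in 3 seconds?",
        "Does anything feel like a big claim with no proof?",
        "Is the next step obvious and low-friction?",
    ],
    pre_spend_signal="two outside perspectives (one skeptical, one enthusiastic)",
    in_market_signal="the experiment we were going to run anyway",
    change="make the CTA say what happens after the click",
)

print(cycle.problems())  # an empty list means the cycle follows the 3-2-1 shape
```

After the test runs, fill in `learning`. If it won’t fit in one sentence, that is a hint the cycle changed more than one thing.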

What to do when you don’t have time for “real research”

This is what marketers told us they felt guilty about: knowing they should do research, but shipping weekly—sometimes daily. Here’s what we learned: you don’t need perfect research to make better decisions.

You need a repeatable loop that catches the obvious issues early, reduces internal opinion wars, and makes your next test less random. Think of it like Minority Report, but for creative feedback instead of crime: you’re not predicting the future—you’re preventing avoidable mistakes.

Where Chorus fits in (without pretending we’re a crystal ball)

We built Chorus for the “research latency” problem. Chorus gives you directional, perspective-driven feedback fast by running your creative through Virtual Voices (audience-aligned perspectives) and structured evaluation criteria (so feedback stays consistent and comparable).

The output isn’t a data dump—it’s a report designed to answer: “What should we do next?”

To be clear: Chorus doesn’t replace statistically valid panels or deep qualitative work. It’s built for the work you do between those moments: weekly iterations, new hooks, landing page refreshes, “we need one winner this month” sprints.

How to start (without overthinking it)

Try this once this week:

- Pick one piece of creative you’re about to ship (ad, landing page, email, video).

- Write your three decision questions (clarity, trust, action).

- Get pre-spend feedback from two perspectives you care about (skeptical buyer vs enthusiastic buyer is a good start).

- Make one change on purpose.

- Ship, then compare what you expected to what happened.

That’s the loop. Run it three times and you’ll feel the difference—not because you got “perfect insight,” but because you got momentum.

Want to see Chorus in action? Book a demo or see example reports.