Synthetic Focus Groups: What's in an AI Focus Group Report (And Why It's Different)

Your focus group got cancelled because three participants had “car trouble” and one ghosted entirely. The recruiter wants another $2,000 to reschedule. Your campaign launches in nine days.

TL;DR

Chorus Focus Group Research runs your creative through up to 12 Virtual Voices—each built from demographic, psychographic, and behavioral data—who answer questions tailored to your content and evaluation goals, delivering scored insights and verbatim quotes in under an hour.

You get the depth of qualitative research without the scheduling chaos, recruitment costs, or three-week timelines.

The Focus Group Problem Nobody Talks About

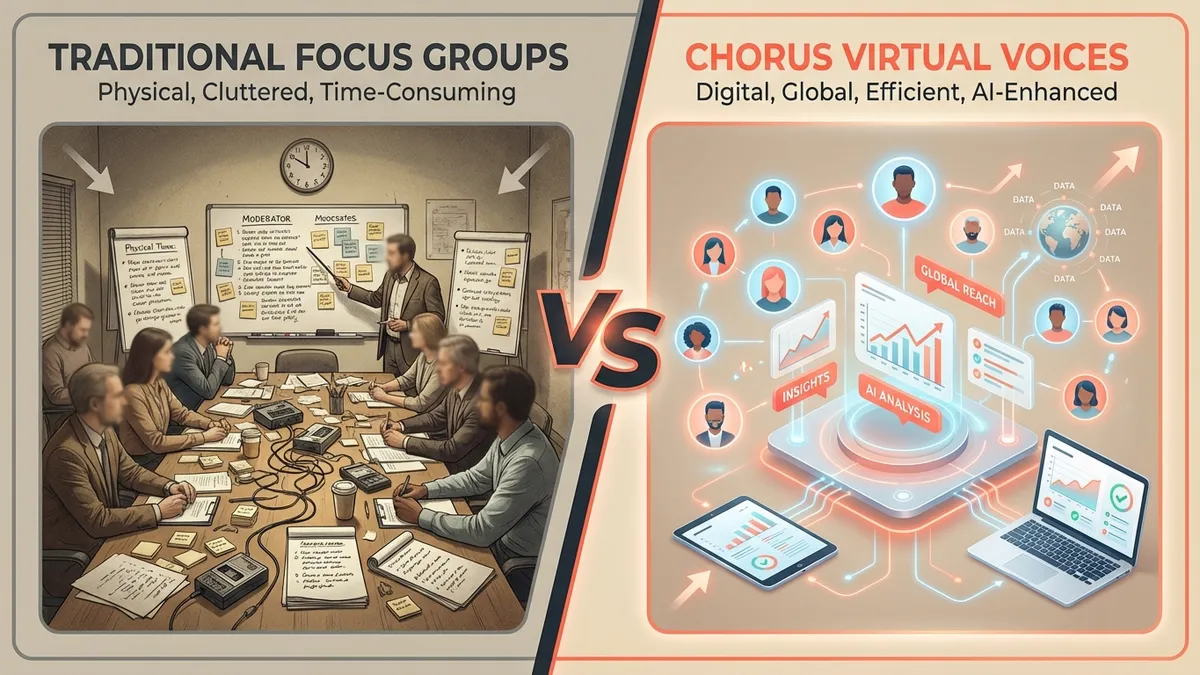

Customers shared the same horror stories about traditional focus groups over and over. One spent $15,000 on a single session, only to have one loud participant dominate the conversation while everyone else nodded along. This “groupthink” effect is a well-documented problem in qualitative research. And the quiet person in the corner who actually represents your target buyer? They said three words the entire session.

We built Chorus because marketers kept telling us they loved the depth of qualitative research but hated everything else about it. The recruiting. The scheduling. The facilities that smell like stale coffee and broken dreams. The moderator who asks leading questions because they’re trying to wrap up before lunch. It’s like trying to study the Fremen by hosting them in a Harkonnen boardroom—the context corrupts the data.

Are Focus Groups Still Effective?

Yes—but not the way they used to be. Traditional focus groups remain effective for exploratory research where you genuinely don’t know what questions to ask, for emotionally complex topics where human nuance matters, and for building internal buy-in (sometimes stakeholders need to see real humans react).

But for creative testing? The evidence is mixed. Research teams we talked to described focus groups as “expensive directional signals at best.” The problems are structural: small sample sizes (8–12 people), groupthink dynamics, moderator bias, and participants who perform rather than react authentically.

Are focus groups worth it? For creative evaluation specifically, we’d argue the ROI has shifted. The same budget that buys one traditional focus group session can run dozens of synthetic focus group tests across multiple creative variants. You trade some human unpredictability for consistency, speed, and the ability to actually iterate before launch.

The question isn’t “focus groups vs. no research”—it’s “which research method fits the question you’re asking and the timeline you have?”

What is Focus Group Research in Chorus?

Focus Group Research in Chorus is what the industry calls a synthetic focus group or AI focus group—it assembles a panel of up to 12 Virtual Voices (synthetic respondents built from demographic, psychographic, and behavioral data) and has them evaluate your creative as if they encountered it in the wild. (New to these terms? See our creative testing glossary.) Each Virtual Voice brings a distinct perspective: their age, income, values, media habits, and brand relationships all shape how they respond. Think of it as a focus group where nobody’s trying to impress the moderator, nobody’s distracted by the free sandwiches, and nobody gives you polite non-answers because they want to go home.

The Virtual Voices aren’t generic “AI respondents.” Each one is constructed from research data to represent specific audience segments you actually care about. A skeptical Gen-X professional evaluates your ad differently than an optimistic millennial early adopter. A price-conscious parent responds differently than a status-seeking executive. You get the variance that makes qualitative research valuable, without the variance that makes it unreliable (like “my Uber was late so I’m in a bad mood”).

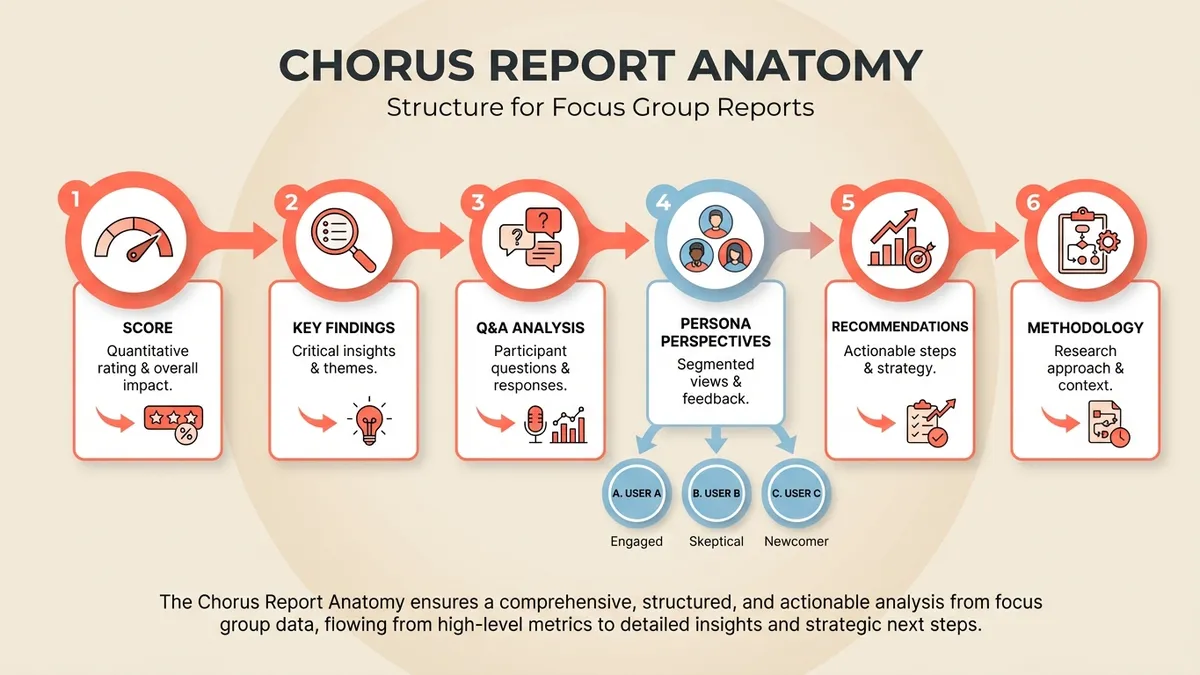

What’s Inside a Focus Group Report?

Let’s walk through what you actually receive. We ran a Coinbase video ad through Chorus, and here’s the anatomy of the report.

Overall Score and Sentiment Breakdown: The report opens with an aggregate score (7.1/10 in the Coinbase example) and a sentiment breakdown showing how Virtual Voices felt across key dimensions. This isn’t a vanity metric: it’s aggregated from every scored question in your chosen rubrics, so you can see exactly where that number comes from. The Coinbase ad scored well on brand differentiation and memorability but showed weakness on trust signals and call-to-action clarity.

Key Findings Summary: Before you dive into the details, you get a synthesized view of what worked versus what needs work. For Coinbase, Virtual Voices responded positively to the brand’s positioning against traditional finance but flagged concerns about whether the ad gave them enough reason to act. This is the “what do I tell my boss in the elevator” section—except it’s backed by actual data instead of your gut feeling and a prayer.

Question-by-Question Analysis: Here’s where the depth lives. Each question from your selected rubrics gets its own breakdown: aggregate score, score distribution across Virtual Voices, and representative quotes. You’re not just seeing “7.2 on message clarity”—you’re seeing which Virtual Voices found it crystal clear versus which thought it was trying too hard. The quotes let you hear the reasoning in their own words. One Virtual Voice might say the CTA felt “aggressive for a first impression” while another calls it “appropriately confident.” Both are useful. Neither would have spoken up in a room full of strangers eating pastries.
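To make the arithmetic behind a question breakdown concrete, here is a minimal sketch of how per-voice scores could roll up into a per-question mean, a score distribution, and an overall score. It is a hypothetical illustration, not Chorus’s scoring method: it assumes each Virtual Voice scores each question on a 1–10 scale and that the overall score is an unweighted mean of the per-question means. The voice names, questions, and scores are invented. The real calculation is described in each report’s Research Methodology section.

```python
from collections import Counter
from statistics import mean

# Hypothetical data: 1-10 scores from each Virtual Voice, keyed by question.
# Voice names, question keys, and values are invented for illustration only.
scores = {
    "message_clarity": {"Voice A": 8, "Voice B": 6, "Voice C": 9, "Voice D": 7},
    "trust_signals": {"Voice A": 5, "Voice B": 4, "Voice C": 7, "Voice D": 6},
    "cta_clarity": {"Voice A": 6, "Voice B": 7, "Voice C": 5, "Voice D": 6},
}


def question_summary(voice_scores):
    """Return (mean score, {score: number of voices}) for one question."""
    values = list(voice_scores.values())
    distribution = dict(sorted(Counter(values).items()))
    return round(mean(values), 1), distribution


def overall_score(all_scores):
    """Assumed rollup: unweighted mean of the per-question means."""
    return round(mean(mean(v.values()) for v in all_scores.values()), 1)


for question, voice_scores in scores.items():
    avg, distribution = question_summary(voice_scores)
    print(f"{question}: mean {avg}, distribution {distribution}")

print("overall:", overall_score(scores))
```

Reporting the distribution next to the mean matters because two questions with the same average can hide very different splits, which is exactly the “crystal clear versus trying too hard” pattern a question breakdown is meant to surface.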

Individual Virtual Voice Perspectives: Each Virtual Voice gets a dedicated section showing their background (who they are, what they value, how they make decisions) and their key takeaways from your creative. This is where pattern recognition happens. When your skeptical Virtual Voice and your enthusiastic one both flag the same concern, that’s a signal. When only your high-income segment responds to luxury positioning, that’s a targeting insight. Traditional focus groups rarely give you clean persona-level analysis, because real humans don’t fit neatly into segments, and in a group setting their answers are shaped by what everyone else just said.

Actionable Recommendations: The report closes with specific, prioritized recommendations based on the aggregate findings. Not “consider improving trust signals” but “add a specific security credential or social proof element in the first 10 seconds to address the trust gap identified by the majority of Virtual Voices.” This is where the rubber meets the road—or where the spice meets the worm, depending on your reference frame.

Research Methodology: Finally, you get transparency on how the sausage is made: which Virtual Voices were included, what questions were asked, and how scoring was calculated. We included this because customers told us they were tired of black-box research that couldn’t explain its own conclusions. If you can’t see the methodology, you can’t trust the findings. That’s true for traditional research too, but good luck getting a recruiting agency to explain their participant screening criteria.

How This Differs from Traditional Focus Groups

The obvious differences: no recruitment, no scheduling, no facilities, no moderator bias, no groupthink, and no three-week wait for results. But the deeper difference is consistency. Run the same creative through Chorus twice and you’ll get nearly identical results. Run it through two traditional focus groups and you might get opposite conclusions depending on who showed up and what they had for breakfast.

This doesn’t mean Chorus replaces all qualitative research. Some questions genuinely require human participants, especially for novel product categories or deeply emotional topics. But for creative evaluation, message testing, and audience response prediction, the signal-to-noise ratio in Chorus is dramatically better. Multiple customers told us they’ve significantly cut back on traditional focus groups after adopting Chorus, reallocating that budget to making more creative variants to test.

Focus Group Alternatives: What Are Your Options?

When marketing teams search for focus group alternatives, they’re usually trying to solve one of three problems: cost (traditional focus groups can run $20,000 per session), speed (3–6 week timelines don’t match sprint cycles), or reliability (the groupthink problem we mentioned earlier).

Here’s how the landscape breaks down:

| Alternative | Best For | Limitation |
|---|---|---|
| Online surveys | Quantitative validation at scale | No depth, no “why” |
| 1:1 user interviews | Deep qualitative insight | Expensive, slow, small sample |
| Unmoderated testing (UserTesting, etc.) | UX feedback, task completion | Less suited for creative/messaging |
| AI creative scoring (Kantar LINK AI, System1) | Predictive ad performance | Quantitative only, no verbatims |
| Synthetic focus groups (Chorus, OpinioAI) | Qualitative depth + speed | Newer category, requires trust-building |

We built Chorus because none of the existing alternatives gave marketers what focus groups actually provide: multiple perspectives, qualitative depth, and the ability to hear how people talk about your creative—not just whether they’d click. Synthetic focus groups preserve that depth while eliminating the logistical nightmare.

Where Chorus Fits In

Focus Group Research is one of three testing modes in Chorus, alongside Quick Evaluation (fast creative scoring) and Deep Analysis (comprehensive strategic review). Most customers start with Quick Evaluation for rapid iteration, then run Focus Group Research on their top two or three concepts before committing to production. It’s the “get diverse perspectives” step before you spend real money.

The reports export to PDF for stakeholder sharing, and all the underlying data is available for your own analysis if you want to slice it differently. We designed it for the workflow marketers actually have: tight timelines, skeptical stakeholders, and never enough budget to test everything you want to test.

Want to see what a full Focus Group report looks like? Check out our Coinbase video ad analysis—the same report we walked through above.

Ready to run your own? Book a demo and we’ll show you how Chorus works with your creative.